Fundamentals

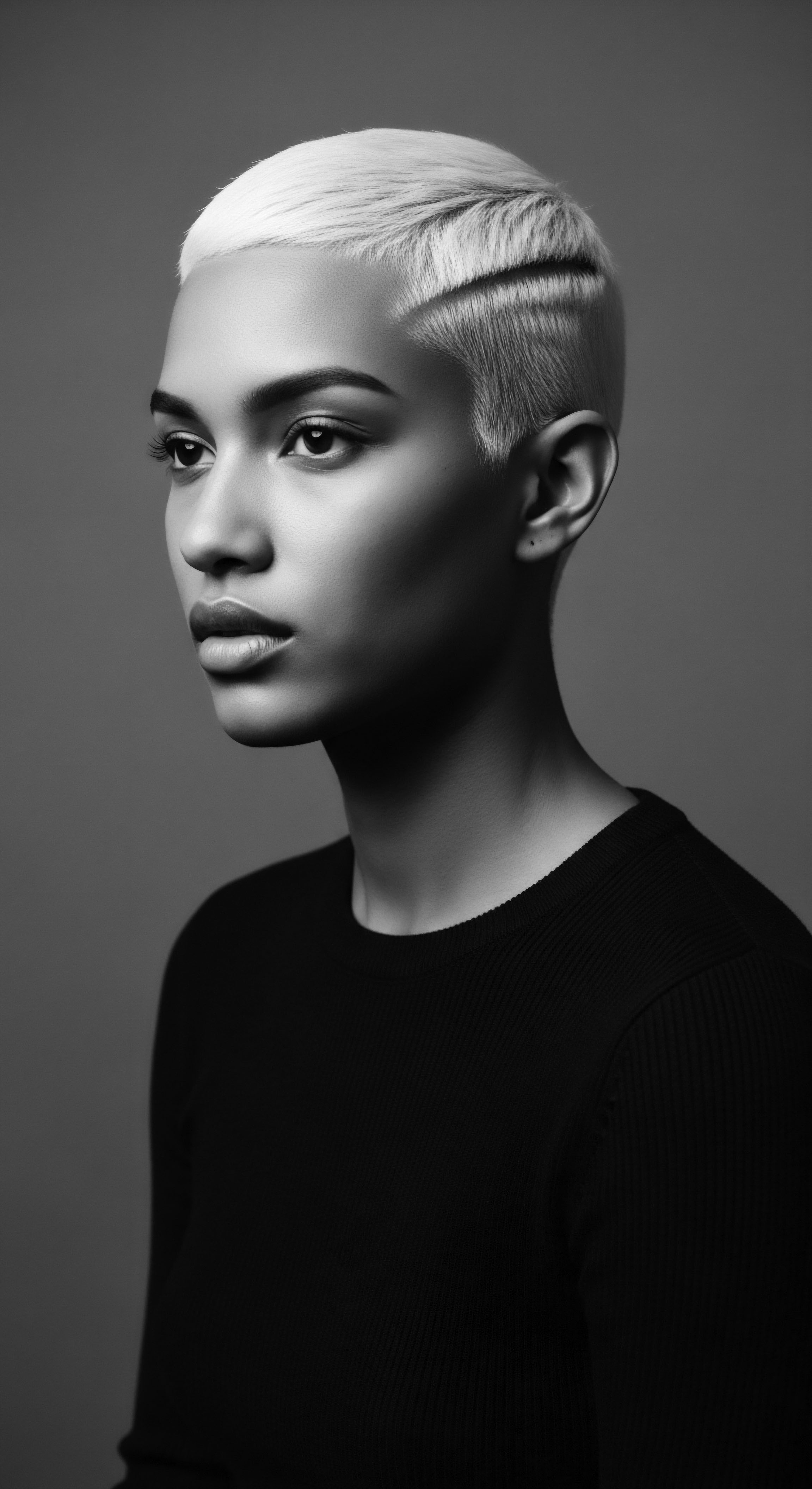

The digital age, with its rapid advancements in artificial intelligence, presents both marvel and challenge. For those of us steeped in the ancestral traditions of hair and its profound significance, it brings a new dimension of concern: Bias in AI Visuals. This phenomenon, at its simplest, refers to the systematic inaccuracies or skewed representations found in visual content generated or processed by artificial intelligence systems. Imagine a rich tapestry woven with vibrant colors and textures, yet some threads are consistently dulled, distorted, or altogether absent.

That is a visual analogy for this bias. It happens when the machine intelligence, learning from vast collections of images, inadvertently replicates or even amplifies societal prejudices present in its training data. The data, the very sustenance of these systems, often reflects a world where certain appearances are overrepresented, while others, particularly those outside dominant cultural norms, remain underrepresented or are depicted stereotypically. This leads to an interpretation or a creation of visuals that do not mirror the genuine diversity of human experiences and forms.

The core difficulty lies in how AI learns. These systems operate on probabilities derived from the patterns they observe in their training datasets. If a certain type of hair, skin tone, or facial feature is less common or misrepresented in the input, the AI’s output will naturally reflect this imbalance. It struggles to create what it has rarely seen, or it defaults to generalizing based on the majority.
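To make that arithmetic concrete, here is a minimal sketch in Python. The label names and counts are invented for illustration, and real image models are far more complex than a tally of labels; the point is only that a system mirroring its data reproduces the imbalance, and a system that settles on the single most likely option never shows the rest.

```python
from collections import Counter

def training_shares(labels):
    """Return each label's share of the training set."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()}

# Hypothetical training set: 80 straight, 15 wavy, 5 coily examples.
labels = ["straight"] * 80 + ["wavy"] * 15 + ["coily"] * 5
shares = training_shares(labels)

# A generator that mirrors its data produces each texture in proportion.
expected_per_1000 = {label: round(1000 * p) for label, p in shares.items()}

# A generator that always picks the most likely option shows only the majority.
default_output = max(shares, key=shares.get)

print(expected_per_1000)
print(default_output)
```

In this toy set, coily hair would appear in roughly one image in twenty under faithful mirroring, and not at all once the system falls back on its default.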

This is not a deliberate act of exclusion on the part of the machine, but rather an outcome of the mirrors we hold up to it. The inherent biases embedded in historical visual archives, from early photography to modern digital collections, become absorbed by the AI, which then reflects these imperfections back to us, often with an amplified intensity.

Bias in AI visuals stems from incomplete or skewed data, causing digital misrepresentation of diverse appearances.

For communities where hair holds deep cultural and historical significance, such as Black and mixed-race communities, this issue holds particular gravity. Our hair, with its coils, curls, waves, and protective styles, carries centuries of ancestral stories, resilience, and beauty. When AI fails to recognize the nuances of a bantu knot, misidentifies locs, or renders textured hair as an amorphous mass, it is not merely a technical glitch.

It represents a digital erasure, a continuation of historical marginalization where our visual heritage is overlooked or misunderstood. The impact extends beyond mere aesthetics, reaching into realms of identity, self-perception, and the very visibility of our histories.

Echoes from the Source: How AI Learns

Artificial intelligence models, particularly those designed for visual tasks, learn through exposure to immense volumes of data. They absorb patterns, correlations, and relationships from these digital libraries. When we consider how these systems are trained, we might think of an eager apprentice, learning about the world solely from the books provided. If those books, however, predominantly illustrate a single type of hair texture, or if they portray other textures only in limited, often stereotypical ways, the apprentice’s understanding of hair will be skewed.

This is the root of visual bias. The datasets, often compiled from vast swathes of internet imagery, carry the societal prejudices of the real world. A lack of diversity among those who compile these datasets can lead to the AI “learning” biased patterns. For instance, if the training images disproportionately feature straight hair, the AI will default to that form, perceiving it as the norm.

The very concept of what is considered “normal” in visual data can be an inherited problem. Centuries of art, photography, and media production have often centered specific beauty standards, inadvertently pushing others to the periphery. When this historical visual legacy is digitized and fed into AI systems, these historical imbalances become hardcoded into the new digital intelligence. This means that AI does not start from a neutral ground; it inherits a legacy of visual preference and omission.

- Data Gaps ❉ AI models are only as robust as the data they receive. Significant gaps exist where specific hair textures, skin tones, or cultural appearances are absent or sparsely represented.

- Algorithmic Generalization ❉ When confronted with limited examples, AI tends to generalize, creating generic representations that fail to capture the specific beauty and variety of textured hair.

- Reinforcement Loops ❉ As more AI-generated content enters the digital realm, if left unchecked, it can perpetuate the existing biases, creating a self-reinforcing cycle of visual misrepresentation.
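The reinforcement loop in the last point can be sketched as a small simulation. Everything here is an assumption made for illustration: the starting share, the `power` knob that makes the toy generator over-produce the majority, and the fraction of synthetic images mixed back into the pool each round.

```python
def next_round(minority_share, synthetic_fraction=0.5, power=2.0):
    """One turn of the loop: train, generate, mix outputs back into the pool."""
    # Toy generator: power > 1 exaggerates whatever is already common.
    minority = minority_share ** power
    majority = (1.0 - minority_share) ** power
    generated_minority = minority / (minority + majority)
    # The next pool blends existing images with freshly generated ones.
    return ((1.0 - synthetic_fraction) * minority_share
            + synthetic_fraction * generated_minority)

share = 0.20  # hypothetical starting share of textured-hair images
history = [share]
for _ in range(5):
    share = next_round(share)
    history.append(share)

print([round(s, 3) for s in history])
```

With `power=1.0` the share holds steady, which suggests the decline comes from the generator's tilt toward the majority rather than from the feedback loop alone.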

The Absent Strand: Early Visual Technologies

The challenges presented by AI visuals are not entirely new; they echo a longer history of visual technologies failing to accurately capture diverse human appearances. Color film, for example, was long calibrated for lighter skin tones and struggled to render darker complexions with clarity and depth. The “Shirley Card,” a standard reference used in photo labs for color calibration, frequently featured a white model, thereby establishing white skin as the baseline for color film (Roth, 2009). This historical precedent of a “default” human image, designed without consideration for the spectrum of global humanity, created a legacy of misrepresentation.

This historical oversight extended beyond skin tone to hair. The physics and aesthetics of textured hair, with its unique light absorption and curl patterns, were often poorly understood or deliberately ignored in mainstream visual media. This historical context provides a crucial backdrop for understanding contemporary AI visual bias. The “digital divide” in representation is not merely a byproduct of modern algorithms; it is a direct descendant of analog limitations and cultural preferences that favored certain physiognomies.

Intermediate

Moving beyond the foundational understanding, an intermediate view of Bias in AI Visuals examines the mechanisms by which these disparities are not only learned but also amplified and perpetuated within complex systems. It involves a deeper look into the workings of the algorithms themselves and the broader societal implications of their biased outputs. The problem extends past simply not seeing; it becomes about seeing in a distorted, often damaging, way. This algorithmic gaze, shaped by skewed data, can inadvertently reinforce harmful stereotypes, particularly for individuals with textured hair.

When generative AI attempts to create images, it draws from the patterns it has absorbed. If its training data primarily shows individuals with straight hair in “professional” settings, and textured hair in “ethnic” or “informal” contexts, the AI will learn to associate these visual cues. This means that a prompt seeking an image of a “successful CEO” might yield predominantly individuals with straight hair, while a prompt for a “dancer” might frequently present someone with braids or locs, even if such explicit instructions were not provided.

This automatic categorization and stereotype propagation represent a significant challenge. The machine, in its pursuit of statistical probability, inadvertently becomes a curator of existing social biases.
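A simple way to see how such associations form is to count how often each hairstyle appears alongside each context in the training data. The sketch below uses invented (context, hairstyle) pairs; actual models learn these links implicitly from pixels and captions rather than from a table, so treat this as an analogy rather than a description of any real system.

```python
from collections import Counter

def hair_given_context(pairs, context):
    """Estimate the share of each hairstyle among images tagged with a context."""
    counts = Counter(hair for ctx, hair in pairs if ctx == context)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {hair: n / total for hair, n in counts.items()}

# Hypothetical tagged images: (context, hairstyle).
pairs = (
    [("ceo", "straight")] * 9 + [("ceo", "locs")] * 1
    + [("dancer", "straight")] * 3 + [("dancer", "braids")] * 7
)

ceo = hair_given_context(pairs, "ceo")
dancer = hair_given_context(pairs, "dancer")
print(ceo)
print(dancer)
```

A prompt that mentions only "CEO" inherits the first distribution, which is how a hairstyle nobody asked for ends up in the picture.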

Bias in AI visuals isn’t just about omission; it involves the perpetuation of stereotypes, digitally reshaping how we are seen.

Echoes in the Algorithmic Loom: How Bias Takes Form

The bias in AI visuals takes root in the very construction of the algorithms. When AI models are trained, they build internal representations of the world based on the vast input they receive. These representations, often numerical vectors, capture statistical relationships between visual features.

If the training data, for instance, contains an imbalanced representation of various hair textures, the AI’s internal model for “hair” will be skewed. This can lead to:

- Homogenization of Difference ❉ AI may struggle to differentiate between distinct textured hair types, simplifying the rich diversity of coils, kinks, and curls into a generic, often inaccurate, depiction.

- Misattribution of Characteristics ❉ Certain hair textures might be inadvertently linked to non-hair attributes like socioeconomic status, profession, or even moral character, reflecting societal prejudices present in the training data.

- Failure in Contextual Understanding ❉ AI might not recognize the cultural significance of protective styles or ceremonial hair adornments, treating them as mere visual noise or, worse, misidentifying them entirely.

The algorithms, in essence, learn the “grammar” of the visual world from their datasets. If this grammar is incomplete or biased, the language they speak back to us will also be flawed. The resulting images become reflections of the limitations of the training data, not accurate mirrors of the world’s visual variety. This cycle of flawed input leading to flawed output can have a detrimental effect on how individuals perceive themselves and are perceived by others.

The Unseen Crown: Real-World Consequences for Textured Hair

The consequences of biased AI visuals extend beyond the digital realm, casting shadows upon the lived experiences of individuals with textured hair. When AI-generated images fail to accurately represent Black and mixed-race hair, or worse, perpetuate derogatory stereotypes, it contributes to a feeling of digital erasure. Consider the impact on young individuals seeking visual affirmation of their beauty in online spaces. If AI systems consistently generate images that do not reflect their natural hair, it can reinforce societal beauty standards that exclude them.

This lack of authentic representation can lead to practical limitations as well. Imagine virtual try-on applications for hairstyles or beauty products that do not cater to the unique characteristics of textured hair, leading to inaccurate simulations or complete omissions. Or, consider how AI-powered image analysis tools, used in various industries, might misclassify or overlook individuals based on their hair, thereby affecting everything from professional opportunities to personal safety. The invisibility in digital spaces can translate into real-world disadvantages.

The concern stretches to how AI might shape perceptions of professionalism. If AI models are trained on datasets where “professional” appearances are overwhelmingly depicted with straight hair, then individuals with natural textured hair might be subtly, or overtly, marginalized in visually mediated contexts, like video interviews or online profiles. The subtle algorithmic suggestions can carry significant social weight.

Beyond the Pixel: Emotional Resonance

Hair is not merely an aesthetic feature; it is a repository of identity, memory, and community for many Black and mixed-race people. The lack of accurate portrayal in AI visuals, therefore, impacts not just pixels, but souls. When an ancestral practice, like the careful braiding of hair that has been passed down through generations, is either ignored or rendered incorrectly by AI, it diminishes its recognized value in the digital sphere. This can create a sense of disconnect for individuals who seek to see their authentic selves reflected in the evolving digital landscape.

The yearning for visual affirmation is a deeply human need, and when technology falls short, it leaves a void. The misrepresentation contributes to what some scholars term “digital dehumanization,” where the very human aspects of one’s identity are overlooked or simplified by algorithmic processes.

Academic

Bias in AI Visuals, the systematic prejudice manifest in AI-generated and AI-processed imagery, is a complex phenomenon rooted in the statistical foundations and socio-technical design of machine perception and generation systems. It includes the unintentional yet pervasive skewing of visual outcomes, particularly in image recognition, classification, and generative synthesis, which privileges certain demographic characteristics while marginalizing others. Its scholarly explication involves scrutinizing the underlying datasets, the algorithmic architectures, and the socio-historical contexts that inform both.

This particular delineation of AI bias moves beyond superficial errors; it concerns the reification of pre-existing societal inequities within ostensibly objective computational frameworks, a dynamic that Ruha Benjamin has termed the “New Jim Code” and that Noble (2018) documents in the context of search engines. The core of this issue lies in the predictive power of AI models, which, when fed historical data reflecting exclusion, will continue to predict and produce exclusionary representations.

Central to understanding this problem is the observation that AI models operate on vast repositories of visual information, often scraped from the internet without comprehensive auditing for representational equity. The probabilistic outputs of these models reflect the statistical prevalence and dominant categorizations within their training data. This mechanism means that visual patterns associated with Black and mixed-race individuals, especially those related to textured hair, which has historically been underrepresented or negatively stereotyped in mainstream media, receive inadequate computational weight. Consequently, when AI is prompted to create or interpret images involving these groups, its reliance on skewed data leads to inaccuracies, homogenization, or outright misrepresentation.

Bias in AI visuals means computational systems replicate societal prejudices, often stemming from skewed training data and historical omissions, leading to misrepresentation.

The Algorithmic Ancestor: Tracing Bias through Data Lineages

The genesis of visual bias in AI models often resides within the ancestral data upon which they are built. These immense collections, typically gathered from disparate internet sources, inadvertently carry the vestiges of historical and cultural biases. For example, legacy media (photographs, films, and digital archives) have historically underrepresented individuals with darker skin tones and textured hair, or depicted them in stereotypical ways (Roth, 2009; Gilliard, 2019). When these datasets become the training grounds for AI, the algorithms inherit these imperfections, learning a skewed ‘reality’ of human appearance.

The concept of “default” in computer graphics, for instance, has long centered on Eurocentric skin tones and hair textures, influencing how AI is trained to process and generate human forms (Kim, 2022). This structural prejudice, baked into the very fabric of data collection, means that the AI’s “vision” of the world is inherently limited by the narrow lens of its heritage.

This phenomenon extends to the very classification systems used to label data. If hair types are simplified, or if specific Black or mixed-race hairstyles are not distinctly categorized or are mislabeled within datasets, the AI will fail to recognize them authentically. This leads to what researchers call “racial homogenization” within AI-generated imagery, where the nuanced diversity of racial groups is flattened into a generic, often inaccurate, depiction (Tadesse et al., 2022). This problem is not simply one of quantity, but of quality and specificity within the data.

Generative AI and the Homogenization of Textured Hair

Generative AI models, such as those that convert text prompts into images, vividly illustrate this concern. These tools, which have found widespread application, frequently struggle with accurate portrayals of Black and mixed-race individuals, especially concerning their hair. Minne Atairu, an artist and researcher, meticulously documented this challenge in her work with Midjourney, a generative AI program.

Her “Cornrow Studies” from 2024 revealed instances where the AI produced images of dark-skinned Black women with cornrows that appeared “too deep on the forehead” or “rested atop the head instead of being braided into hair strands,” sometimes even resembling an “atomic wig” (Atairu, 2025). This practical observation demonstrates how the AI, despite explicit prompts, defaults to an inaccurate understanding of textured hair morphology and styling.

The phenomenon Atairu observed is not an isolated incident. More broadly, generative AI models, when prompted to create images of people of color, frequently default to homogenized or stereotypical hair textures. These models often fail to accurately portray the vast spectrum of Black and mixed-race hair heritage, including intricate braids, locs, or complex natural coil patterns (Tadesse et al., 2022).

This computational oversight effectively forces a visual assimilation into Eurocentric hair norms, erasing centuries of cultural expression held within varied textures and styles. The systems, trained on datasets with limited diversity, lack the granular data necessary to authentically render such hairstyles, leading to visual artifacts that betray a fundamental misunderstanding of their structure and presence.

The impact of this visual homogenization extends beyond mere aesthetic critique. It perpetuates a digital landscape where authentic representations of textured hair are either absent or distorted. This reinforces a societal message that certain hair types are “other” or “unconventional,” challenging the self-acceptance and cultural affirmation of those whose hair falls outside the narrow AI-generated norm. The challenge is not only to diversify the training data but to cultivate an algorithmic understanding that appreciates the biophysical complexities and cultural significance of textured hair.

The Silence in the Pixels: Quantitative Evidence of Visual Exclusion

The pervasive nature of visual bias is supported by quantitative analyses of AI system performance. A foundational study by Buolamwini and Gebru (2018), which examined commercial gender classification systems, revealed significant accuracy disparities across skin tone and gender: error rates were highest for darker-skinned women and lowest for lighter-skinned men. Though not directly focused on hair, this work highlighted a systemic issue in facial analysis algorithms: their diminished performance on underrepresented groups.

This deficiency in recognizing diverse physiognomies likely extends to hair, given its integral connection to facial appearance and identity. The struggle of these systems to accurately identify individuals with darker complexions may go hand in hand with an inability to correctly process and generate the hair textures associated with them.
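The kind of disaggregated evaluation these studies rely on can be sketched in a few lines of Python. The records below are invented, and the group names are placeholders; this is not the protocol of any cited study, only an illustration of why a single overall score can hide a gap between groups.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """records: iterable of (group, true_label, predicted_label) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, predicted in records:
        total[group] += 1
        correct[group] += int(truth == predicted)
    return {group: correct[group] / total[group] for group in total}

# Hypothetical results: 20 test images per group.
records = (
    [("lighter", 1, 1)] * 19 + [("lighter", 1, 0)] * 1
    + [("darker", 1, 1)] * 13 + [("darker", 1, 0)] * 7
)

overall = sum(truth == pred for _, truth, pred in records) / len(records)
per_group = accuracy_by_group(records)
gap = max(per_group.values()) - min(per_group.values())

print(overall, per_group, round(gap, 2))
```

Here the overall figure looks respectable while one group fares far worse than the other, which is why reporting results group by group matters.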

Research consistently shows that AI facial recognition algorithms perform less accurately on women and on individuals with darker skin tones (Grother et al., 2019). Studies have also explored how facial hair and hairstyles influence recognition accuracy, suggesting that variations in male facial hair might account for some observed gender differences in performance; the underlying problem of data imbalance nonetheless remains. For instance, the “Gender Gap in Face Recognition Accuracy Is a Hairy Problem” discusses how different distributions of facial hairstyle across demographics can create a false impression of relative accuracy (Ozturk et al., 2024). They found that even when larger training sets are used, accuracy variations caused by hairstyles can persist. This underscores that simply increasing data quantity is not enough; the data must possess balanced representation across diverse attributes, including hair textures and styles, to truly mitigate bias.
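One common way to approximate balance without discarding data is to weight each example inversely to the frequency of its group, so that every group carries equal total weight during training. The sketch below uses invented counts and is not the method of the studies cited above; reweighting also cannot conjure detail that was never photographed, so it complements, rather than replaces, collecting more representative images.

```python
from collections import Counter

def balanced_weights(labels):
    """Weight per label: total / (number_of_groups * group_count)."""
    counts = Counter(labels)
    total = len(labels)
    n_groups = len(counts)
    return {label: total / (n_groups * n) for label, n in counts.items()}

# Hypothetical training labels: 80 straight, 15 wavy, 5 coily.
labels = ["straight"] * 80 + ["wavy"] * 15 + ["coily"] * 5
weights = balanced_weights(labels)

# After weighting, each group contributes the same total weight.
counts = Counter(labels)
group_totals = {label: counts[label] * weights[label] for label in counts}

print(weights)
print(group_totals)
```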

Generative AI models often produce homogenized or stereotypical textured hair, digitally assimilating complex Black hair heritage into Eurocentric norms.

Reclaiming the Gaze: The Call for Culturally Attuned AI

Addressing Bias in AI Visuals requires a multi-pronged approach that extends beyond technical corrections to encompass a cultural and ethical restructuring of AI development. It necessitates a shift towards culturally attuned AI, where the design, training, and deployment of visual systems actively prioritize inclusivity and accurate representation. This means moving away from a “universal” human model that implicitly defaults to dominant demographic traits and instead building systems that genuinely understand and value the full spectrum of human appearances, including the rich diversity of textured hair.

One crucial step involves the intentional curation of more inclusive datasets. This means actively seeking out and incorporating images that authentically represent various hair textures, styles, and skin tones, especially those historically marginalized. Efforts like the Open Source Afro Hair Library (OSAH) are exemplary in this regard, providing a free database of 3D images of Black hair created by Black artists to counter the bias in computer graphics technology (Darke, 2025). Such initiatives are not simply about adding more data; they are about adding data with specific cultural knowledge and intent, ensuring that the AI learns from a source that honors heritage.

Another critical aspect involves developing algorithmic architectures that are more robust to variations in appearance. This could include designing models that do not rely on a singular “ideal” representation, but rather learn to process and generate a wide array of visual characteristics with equal proficiency. Researchers are exploring methods for simulating human hair that encompass all types, moving away from historically biased standards of beauty (Kim, 2022). This scientific endeavor, coupled with cultural sensitivity, points towards a future where AI can accurately depict the intricate physics and aesthetics of textured hair, from tight coils to flowing locs.

Furthermore, the ethical framework surrounding AI development must be centered on principles of fairness and equity. This requires a diverse group of creators and researchers in the AI field who bring varied perspectives and lived experiences to the design process. When the developers themselves possess a deep understanding of varied cultural appearances, the likelihood of unintentional bias diminishes. This approach, sometimes termed “Afrocentricity in AI,” seeks to embed African history, culture, and values as a foundation for understanding present and future experiences, guiding AI tools towards more equitable outcomes (Igbinedion, 2024).

The Path Forward: Co-Creation and Ethical Stewards

The journey towards a more equitable visual AI landscape requires collaboration and a commitment to shared responsibility. It calls for technologists to work hand-in-hand with cultural experts, historians, and community members to ensure that AI systems are not only technically proficient but also culturally competent. This co-creation model ensures that traditional knowledge and lived experiences directly inform the development of future visual AI.

Specific steps include:

- Inclusive Dataset Development ❉ Actively compiling and vetting datasets that are balanced across diverse demographic groups, specifically prioritizing hair textures and styles, skin tones, and facial features that have been historically excluded.

- Algorithmic Auditing for Bias ❉ Regularly and rigorously testing AI visual models for discriminatory outputs, particularly against marginalized communities, and implementing corrective measures based on these assessments.

- Culturally Literate AI Training ❉ Incorporating experts in cultural studies, anthropology, and heritage into the AI training process, ensuring that the systems learn not just pixels, but the historical and cultural significance of various appearances.

- Transparent Model Design ❉ Working towards greater transparency in AI model development, allowing for public scrutiny and accountability regarding potential biases in visual outcomes.

- Community Feedback Loops ❉ Establishing mechanisms for continuous feedback from affected communities, ensuring that their experiences and perceptions of AI-generated visuals directly inform ongoing improvements.
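As one illustration of what an audit might involve, the sketch below compares the hairstyles in a batch of generated images against a reference distribution and flags any group that falls well short. The counts, the reference shares, and the 0.8 threshold are all hypothetical; choosing the reference is itself a value judgment, and one best made together with the communities concerned.

```python
def representation_ratios(output_counts, reference_shares):
    """Ratio of each group's observed share to its reference share."""
    total = sum(output_counts.values())
    if total == 0:
        raise ValueError("no outputs to audit")
    return {
        group: (output_counts.get(group, 0) / total) / reference
        for group, reference in reference_shares.items()
    }

def flag_underrepresented(ratios, threshold=0.8):
    """Groups whose observed share is below threshold * reference share."""
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)

# Hypothetical audit of 200 images generated from a neutral prompt.
output_counts = {"straight": 170, "wavy": 24, "coily": 6}
reference_shares = {"straight": 0.5, "wavy": 0.3, "coily": 0.2}

ratios = representation_ratios(output_counts, reference_shares)
flagged = flag_underrepresented(ratios)

print({group: round(r, 2) for group, r in ratios.items()})
print(flagged)
```

A count like this measures only presence; whether a cornrow or loc is rendered faithfully still calls for the human and cultural review described in the steps above.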

The implications for human identity and well-being are substantial. When AI can accurately reflect the beauty of diverse textured hair, it affirms belonging, counters historical marginalization, and fosters a sense of pride. When it fails, it risks deepening existing divides and perpetuating the painful legacy of visual exclusion. The conscientious creation of AI visual systems, therefore, becomes an act of ancestral honoring, safeguarding the visual heritage of all people for generations to come.

Reflection on the Heritage of Bias in AI Visuals

The journey through the landscape of Bias in AI Visuals brings us to a contemplative clearing, where the echoes of ancestral wisdom mingle with the hum of future possibilities. Our textured hair, a living archive of generations, carries within its coils and strands stories of resilience, adornment, and identity. When we speak of bias in the artificial gaze, we are speaking of its capacity to dim the light on these sacred histories, to flatten the vibrant contours of our lived experiences into a homogenous, often unrecognizable, digital form.

The challenge laid bare by AI visuals is not simply a technical hurdle for algorithms to overcome; it represents a profound moral and cultural imperative. We are summoned to ensure that the evolving digital mirror reflects the full, authentic spectrum of human beauty, particularly that which has been marginalized for centuries.

The meticulous braiding of a cornrow, the careful cultivation of a loc, or the joyous expression of an afro are not arbitrary styles; they are living testaments to creativity, resistance, and connection to a lineage that survived unimaginable hardships. For AI to misapprehend these expressions, to render them inaccurately or to ignore them entirely, is to digitally mimic a historical erasure. It is a reminder that technology, while offering boundless potential, also carries the imprints of its human creators and the societies they inhabit. If those societies hold unexamined biases, the technology will, in turn, replicate them with unnerving precision.

Our focus, as stewards of hair knowledge and ancestral wisdom, must center on the intentional cultivation of digital environments where our heritage is seen, understood, and celebrated. This means advocating for datasets that are rich with the diversity of textured hair, for algorithms that learn the profound physics of a coil as readily as a straight strand, and for systems that honor the cultural weight of each style. It calls upon us to recognize that the pursuit of technological advancement must walk hand-in-hand with ethical consideration and a deep respect for the human spirit, manifested so beautifully in our crowns. The spirit of a strand, after all, holds the wisdom of countless sunrises and sunsets, a living testament to continuity, identity, and the enduring beauty of who we are.

References

- Atairu, M. (2025). How Can Synthetic Images Render Blackness? Aperture.org.

- Buolamwini, J., & Gebru, T. (2018). Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification. Proceedings of the Conference on Fairness, Accountability, and Transparency.

- Darke, A. M. (2025). Researchers Create Algorithms To Transform Representation Of Black Hair In Computer Graphics And Media. AfroTech.

- Gilliard, C. (2019). The (In)visibility of Blackness: Digital Humanities and the Problem of Race. Journal of Futures Studies, 24(2), 27-36.

- Grother, P., Ngan, M., & Hanaoka, K. (2019). Face Recognition Vendor Test (FRVT) Part 3: Demographic Effects. National Institute of Standards and Technology.

- Igbinedion, I. (2024). Why Black Women Will Want to Use This AI-Powered Wig Platform. digitalundivided.

- Kim, T. (2022). Yale professors confront racial bias in computer graphics. Yale Daily News.

- Noble, S. U. (2018). Algorithms of Oppression: How Search Engines Reinforce Racism. New York University Press.

- Ozturk, K., Wu, H., & Bowyer, K. W. (2024). Can the accuracy bias by facial hairstyle be reduced through balancing the training data? arXiv preprint arXiv:2405.19799.

- Roth, L. (2009). Looking at Shirley, the Ultimate Norm: Colour Balance and Racial Bias in Photography. Loving and Hating Hollywood: The Cinema and Cultural Studies Reader, 117-133.

- Tadesse, M. G., Zewge, T. B., & Abraham, R. (2022). The Problematic Pitfalls of Generative Models for People of Color. AI and Society, 37(2), 527-540.