Fundamentals

The spirit of Algorithmic Fairness, when viewed through the sacred lens of textured hair heritage, finds its earliest echoes in the elemental recognition of inherent worth and the right to equitable treatment. At its most fundamental, Algorithmic Fairness speaks to the principle of impartiality, ensuring that systems designed for decision-making or classification do not inadvertently, or by design, disadvantage specific groups. It is a commitment to ensuring that automated processes, those digital threads woven into the fabric of our contemporary lives, extend the same understanding, the same respect, and the same opportunities to all, without the subtle or overt biases that have historically shadowed human interactions.

Across many ancient traditions, from the sun-drenched plains of Africa to island communities across the seas, people held that the practices of care, the distribution of sustenance, and the sharing of wisdom required an even hand. This understanding, a kind of primal fairness, aspired to ensure that no one was left behind due to the shade of their skin, the curl of their coil, or the rhythm of their walk. It was a societal algorithm of sorts, organically built upon principles of communal wellbeing, where the wisdom of elders guided the young, and where the uniqueness of each individual was seen not as a deviation but as a contributing note in the grand symphony of shared existence. Our ancestors, in their intuitive wisdom, practiced a form of fairness that sought balance and reciprocity.

In modern times, as digital constructs increasingly mediate our experiences, the simple meaning of Algorithmic Fairness becomes a renewed call to this ancestral balance. It asks that the complex computational sequences powering our world do not replicate or deepen the inequities of the past. The intention is clear: to ensure these digital architects treat every individual, every curl pattern, every melanin-rich complexion, with the same fidelity and discernment.

Algorithmic Fairness, at its foundation, insists that digital systems operate with an impartiality that mirrors the communal wisdom of ancestral traditions, acknowledging and valuing the inherent worth of every individual.

Roots of Impartiality

The concept of Algorithmic Fairness draws directly from age-old principles of equitable distribution and just consideration. In the tender ritual of communal hair braiding, for instance, there is an inherent fairness in the distribution of attention, the shared knowledge of knotting patterns, and the careful selection of nourishing oils. Each head, each strand, receives devoted handling, reflecting a balance of respect and individualized care. This historical continuity helps clarify the basic meaning of Algorithmic Fairness as it translates into the digital domain.

- Intentionality in design ❉ Systems should be constructed with the deliberate goal of avoiding biased outcomes for diverse populations.

- Data representation ❉ The information upon which these systems are built must comprehensively mirror the rich diversity of humanity.

- Equitable outcomes ❉ The decisions or classifications produced by algorithms must not disproportionately harm or favor particular groups.

Simple Interpretations for a Complex World

For those beginning to understand these digital systems, Algorithmic Fairness means asking whether a computer program, like a village elder, acts with wisdom and without prejudice. Does it see the myriad expressions of beauty within textured hair, or does it only recognize a narrow, predefined standard? The aim here is to make the invisible workings of these digital tools approachable, so that we can question their influence on our daily lives.

Consider a simple application designed to recommend hairstyles. If its underlying data mostly featured straight hair or very loose curl patterns, it might consistently suggest styles that are incompatible with tightly coiled textures, or it might fail to offer culturally resonant options like braids, twists, or locs. This omission, though seemingly small, creates an experience of exclusion. It reflects an incomplete interpretation of fairness, one that fails to acknowledge the full spectrum of beauty that textured hair represents.
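The hairstyle recommender described above can be checked, at least roughly, by counting how each hair texture is represented in its training data. The following is a minimal sketch, not a definitive audit: the category names, the 10% threshold, and the label counts are all hypothetical and chosen only for illustration.

```python
from collections import Counter


def representation_report(labels, expected_categories, min_share=0.10):
    """Report each expected category's share of the data.

    A category that never appears still shows up in the report with a
    share of 0.0, so that complete omissions are visible.
    min_share is an assumed, illustrative threshold.
    """
    counts = Counter(labels)
    total = len(labels)
    report = {}
    for category in expected_categories:
        share = counts[category] / total if total else 0.0
        report[category] = {
            "share": share,
            "underrepresented": share < min_share,
        }
    return report


# Hypothetical labels for a recommender's training images (invented data).
training_labels = (
    ["straight"] * 70 + ["wavy"] * 20 + ["curly"] * 7 + ["coily"] * 3
)
expected = ["straight", "wavy", "curly", "coily", "locs"]

report = representation_report(training_labels, expected)
for category in expected:
    info = report[category]
    flag = "LOW" if info["underrepresented"] else "ok"
    print(f"{category:<9} {info['share']:.0%}  {flag}")
```

In this invented dataset, curly and coily textures fall below the threshold and locs do not appear at all, which is the kind of gap that would lead a recommender to overlook them.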

Intermediate

Stepping beyond foundational concepts, the intermediate understanding of Algorithmic Fairness invites us to consider the deeper layers of its meaning, moving beyond simple definitions to explore how historical injustices can become embedded within digital systems. It is here that we begin to discern the often-subtle mechanisms by which inequities propagate through the intricate networks of code and data that shape our contemporary world. Algorithmic Fairness at this level becomes a more comprehensive elucidation, acknowledging the journey from broad principles to specific societal impacts, particularly as they relate to the rich tapestry of Black and mixed-race hair experiences.

The sense of Algorithmic Fairness, in this expanded view, recognizes that algorithms are not neutral entities born of pure logic. They are, in fact, reflections of human choices, historical data, and societal norms. Just as ancestral practices of hair care were shaped by climate, available resources, and cultural identity, so too are algorithms shaped by the data they are fed and the objectives they are programmed to achieve. When those inputs bear the imprint of historical bias, the output, however unintentional, will carry forward those disparities.

Algorithmic Fairness, beyond surface-level definitions, represents a critical awareness of how historical biases become encoded within digital systems, requiring a deliberate re-calibration to account for past injustices and to honor the breadth of human experience.

Encoded Legacies: Bias in the Digital Sphere

The significance of Algorithmic Fairness deepens when we consider the enduring legacy of racial bias, particularly within visual technologies. For generations, photographic and cinematic tools were calibrated to lighter skin tones, leaving darker complexions underexposed and less visible. This historical bias in image capture is now mirrored in the datasets used to train contemporary artificial intelligence. The “Gender Shades” project of computer scientists Joy Buolamwini and Timnit Gebru exposed significant disparities in commercial facial analysis technologies.

Their 2018 study, published in Proceedings of Machine Learning Research, found that commercial gender classification systems had considerably higher error rates for darker-skinned women than for lighter-skinned men: as high as 34.7% for darker-skinned women, compared with less than 1% for lighter-skinned men. This is not merely a technical glitch; it is an echo of historical biases in imaging, demonstrating how AI, without deliberate intervention, can perpetuate a “coded gaze” that struggles to accurately perceive and represent diverse individuals, including those with textured hair.
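A disparity of this kind only becomes visible when errors are broken down by subgroup rather than averaged over everyone. The sketch below shows that breakdown on invented counts; the numbers are not the study's data, and the group names and the helper `error_rates_by_group` are hypothetical.

```python
def error_rates_by_group(records):
    """Compute the misclassification rate for each subgroup.

    records: iterable of (group, true_label, predicted_label) tuples.
    """
    totals = {}
    errors = {}
    for group, truth, predicted in records:
        totals[group] = totals.get(group, 0) + 1
        errors[group] = errors.get(group, 0) + (truth != predicted)
    return {group: errors[group] / totals[group] for group in totals}


# Invented evaluation results: 20 images per intersectional subgroup.
records = (
    [("darker_female", "F", "F")] * 13 + [("darker_female", "F", "M")] * 7
    + [("darker_male", "M", "M")] * 18 + [("darker_male", "M", "F")] * 2
    + [("lighter_female", "F", "F")] * 19 + [("lighter_female", "F", "M")] * 1
    + [("lighter_male", "M", "M")] * 20
)

rates = error_rates_by_group(records)
overall = sum(truth != predicted for _, truth, predicted in records) / len(records)
gap = max(rates.values()) - min(rates.values())

print(f"overall error rate: {overall:.1%}")
for group, rate in sorted(rates.items()):
    print(f"{group:<15} {rate:.1%}")
print(f"largest gap between subgroups: {gap:.1%}")
```

With these made-up counts the overall error rate is 12.5%, which looks modest, while the subgroup rates range from 0% to 35%. The aggregate figure hides exactly the gap that matters.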

The implications of this misrecognition extend to the very understanding and representation of Black and mixed-race hair. If systems struggle to properly identify a face, how then can they be expected to accurately interpret the nuances of braids, locs, or twists, or to offer relevant beauty product recommendations? This challenge requires an understanding of Algorithmic Fairness that goes beyond surface-level adjustments, demanding a deeper examination of the societal and historical foundations of the data itself.

Addressing this requires not simply removing “race” as a variable but understanding how other seemingly neutral attributes, such as lighting, background, or even hair texture, can serve as proxies for race, leading to indirect discrimination. The effort to secure Algorithmic Fairness becomes an active commitment to designing systems that are not only fair but also actively inclusive, recognizing the full spectrum of human identity.
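One rough way to see whether a seemingly neutral attribute acts as a proxy is to ask how well that attribute alone predicts group membership. The sketch below compares this with always guessing the most common group. The data, the feature names, and the helper `proxy_strength` are hypothetical, and a real audit would use more careful statistical methods.

```python
from collections import Counter, defaultdict


def proxy_strength(feature_values, groups):
    """Return (baseline_accuracy, feature_accuracy).

    baseline_accuracy: accuracy of always guessing the most common group.
    feature_accuracy: accuracy of guessing, for each feature value, the
    group most often seen with that value.
    """
    total = len(groups)
    baseline = Counter(groups).most_common(1)[0][1] / total
    by_value = defaultdict(Counter)
    for value, group in zip(feature_values, groups):
        by_value[value][group] += 1
    correct = sum(c.most_common(1)[0][1] for c in by_value.values())
    return baseline, correct / total


# Invented data: 50 people in each of two demographic groups.
groups = ["group_a"] * 50 + ["group_b"] * 50

# Hair texture is unevenly distributed across the two groups.
hair_texture = (
    ["coily"] * 45 + ["straight"] * 5      # group_a
    + ["coily"] * 5 + ["straight"] * 45    # group_b
)

# Image file format is distributed identically in both groups.
image_format = (["jpg"] * 25 + ["png"] * 25) * 2

texture_baseline, texture_accuracy = proxy_strength(hair_texture, groups)
format_baseline, format_accuracy = proxy_strength(image_format, groups)

print(f"hair_texture: baseline {texture_baseline:.0%}, from feature {texture_accuracy:.0%}")
print(f"image_format: baseline {format_baseline:.0%}, from feature {format_accuracy:.0%}")
```

In this toy data, hair texture recovers group membership far better than chance even though the group label was never given to the model, while file format reveals nothing. A system that leans on the first feature can discriminate indirectly.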

Beyond Simple Inputs: Understanding Algorithmic Pathways

The meaning of Algorithmic Fairness becomes clearer when we consider the complex pathways through which algorithms process information. It is a question of how these systems, given a set of inputs, arrive at their outputs, and whether that journey is equitable for all groups.

The Algorithmic Justice League, founded by Joy Buolamwini, highlights how biases can be embedded at every stage of an AI system’s development: in its initial design, in the data it is trained on, and in how it is ultimately deployed.

- Data acquisition and curation ❉ The process of collecting and preparing datasets can inadvertently exclude or misrepresent certain groups, leading to a skewed perception of reality.

- Algorithm design and objectives ❉ The very construction of the algorithm, its mathematical goals, and the “rules” it follows can prioritize outcomes that favor a dominant group.

- Evaluation and deployment ❉ How algorithms are tested and applied in the real world can reveal hidden biases that were not apparent during development, particularly if testing sets lack diversity.

Consider, for example, a beauty tech application that uses AI to analyze skin and recommend products. If its training data primarily consists of images of lighter skin tones and straighter hair types, it is likely to perform less accurately for those with darker complexions or textured hair. This affects not only product recommendations but also virtual try-on experiences, leading to misrepresentation or outright failure to function. Such a system, despite its intent, is not fair in its functional reach or its implicit message.

The ethical considerations become paramount. Ensuring Algorithmic Fairness means committing to an iterative process of auditing, redesigning, and re-evaluating systems. It is a continuous striving to build technologies that honor the rich multiplicity of human identity, particularly for those whose beauty and experiences have been historically marginalized. This calls for a profound understanding of how data, code, and human values intertwine, forging a future where technology amplifies, rather than diminishes, the spectrum of human existence.

Academic

From an academic perspective, Algorithmic Fairness stands as a rigorous, interdisciplinary field of inquiry, meticulously scrutinizing how automated decision-making systems can, often unintentionally, perpetuate or even amplify societal biases and historical inequities. It is a profound meditation on the ethical implications of technology, requiring a precise elucidation of its complex mechanisms and their far-reaching consequences. This scholarly pursuit acknowledges that algorithms, far from being neutral mathematical constructs, are deeply embedded within social structures, inheriting and operationalizing the historical predispositions of the data they consume and the human values of their creators. The study of Algorithmic Fairness involves a critical examination of various fairness metrics, of impossibility theorems (which show that several widely used fairness criteria cannot all be satisfied at once, except in special cases such as equal base rates across groups or a perfect predictor), and of the practical challenges of mitigating bias in real-world applications.
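One common form of the impossibility results mentioned above can be illustrated with simple arithmetic. If two groups have different base rates and a classifier has the same true positive rate and false positive rate for both, then the share of positive predictions that are correct must differ between the groups. The sketch below uses assumed, illustrative numbers and is not drawn from any particular study.

```python
def positive_predictive_value(base_rate, tpr, fpr):
    """Share of positive predictions that are correct.

    base_rate: fraction of the group whose true label is positive.
    tpr: true positive rate; fpr: false positive rate.
    """
    true_positives = tpr * base_rate
    false_positives = fpr * (1 - base_rate)
    return true_positives / (true_positives + false_positives)


# Assumed values: identical error behavior for both groups.
tpr, fpr = 0.8, 0.2

# Assumed base rates: the two groups differ only here.
ppv_group_a = positive_predictive_value(base_rate=0.5, tpr=tpr, fpr=fpr)
ppv_group_b = positive_predictive_value(base_rate=0.2, tpr=tpr, fpr=fpr)

print(f"group A positive predictive value: {ppv_group_a:.2f}")  # 0.80
print(f"group B positive predictive value: {ppv_group_b:.2f}")  # 0.50
```

Both groups are treated identically in terms of error rates, yet a positive prediction is right 80% of the time for one group and only 50% of the time for the other. Equalizing one measure leaves another unequal, which is why the choice of fairness metric is a substantive decision rather than a technicality.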

The meaning of Algorithmic Fairness, in this academic context, transcends a mere technical definition; it is a conceptual framework for understanding systemic discrimination in automated processes. It explores how statistical disparities can arise even in the absence of overt discriminatory intent, a phenomenon often termed “statistical discrimination.” Patty and Penn (2022) describe Algorithmic Fairness as an area of study focused on measuring whether a process, or algorithm, might unintentionally produce unfair outcomes and how such unfairness can be mitigated. This complex interplay of data, design, and societal impact forms the bedrock of its scholarly interpretation.

Algorithmic Fairness, within academic discourse, constitutes a rigorous, interdisciplinary examination of how automated systems can perpetuate historical inequities, calling for nuanced strategies to counteract statistical discrimination and embed equitable principles into technological design.

The Inherited Gaze: Algorithmic Bias and Textured Hair

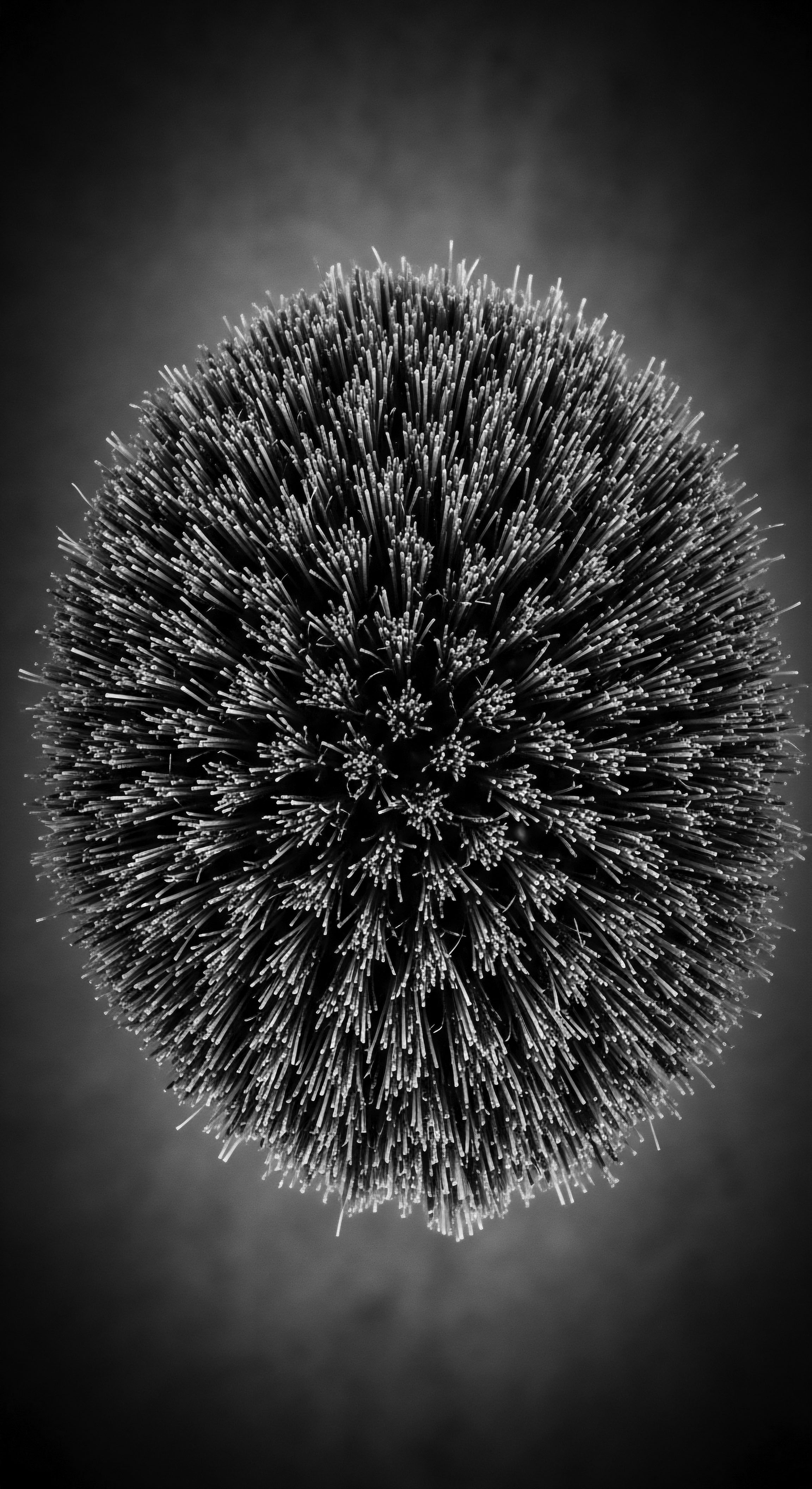

The profound connection between Algorithmic Fairness and textured hair heritage becomes strikingly clear when examining the “coded gaze,” a term coined by Joy Buolamwini. This concept underscores how artificial intelligence systems, particularly facial recognition technologies, are predisposed to biases that disproportionately affect individuals with darker skin tones and non-Eurocentric features, including the varied expressions of Black and mixed-race hair. The foundational datasets used to train these algorithms often lack diverse representation, reflecting historical photographic biases where light-skinned individuals were the photographic norm, leading to underrepresentation of Black and Brown individuals in image collections. This scarcity of comprehensive data means that when algorithms encounter textured hair, intricate braid patterns, or rich, melanated complexions, they can misinterpret, misclassify, or simply fail to “see” them accurately.

Buolamwini and Gebru’s pioneering “Gender Shades” research exemplifies this critical issue. The study systematically assessed commercial facial analysis systems from prominent technology companies, revealing alarming disparities. For instance, these systems exhibited error rates as high as 34.7% for darker-skinned women when attempting gender classification, starkly contrasting with error rates below 1% for lighter-skinned men. Such discrepancies are not incidental; they speak to a fundamental flaw in the systems’ ability to accurately process and recognize the full spectrum of human diversity.

For women of African descent, whose hair is often a central marker of identity, ancestral connection, and personal expression, this algorithmic misrecognition extends beyond a mere technical inaccuracy. It becomes an act of digital erasure, mirroring historical societal failures to acknowledge and celebrate Black womanhood in its authentic form.

This algorithmic misidentification can translate into tangible harms. In contexts ranging from security systems to job applicant screening, a system’s inability to accurately process an individual with textured hair or darker skin can lead to false accusations, denied opportunities, or a pervasive sense of being digitally unseen. Ruha Benjamin (2019), in Race After Technology: Abolitionist Tools for the New Jim Code, eloquently argues that technological innovations can serve as a “New Jim Code,” subtly encoding and perpetuating racial hierarchies through their design and application.

This framework helps us understand how the algorithmic failure to properly interpret textured hair or darker skin is not merely a technical oversight but a continuation of historical patterns of racial stratification, manifesting in automated systems that appear neutral yet deliver discriminatory outcomes. The problem is exacerbated by the fact that many of these algorithms operate in opaque, “black box” manners, making it difficult to discern the sources of bias or to challenge their unfair pronouncements.

Ancestral Practices and Algorithmic Blind Spots

The ancestral knowledge of hair care, rich with its diverse styles, adornments, and rituals, stands in poignant contrast to the algorithmic systems that struggle to perceive this complexity. The communal practices of shaping and tending textured hair, passed down through generations, signify identity, status, spirituality, and resilience. These traditions embody a profound understanding of hair as a living, dynamic entity, far beyond mere aesthetics.

- Stylistic complexity ❉ Traditional Black hairstyles, such as intricate cornrows, elaborate updos, or free-flowing locs, possess geometric and textural qualities that differ significantly from straighter hair types. Algorithms trained predominantly on images of Eurocentric hair may not possess the visual vocabulary to accurately identify these distinct features.

- Dynamic presentation ❉ Textured hair is often styled in myriad ways, changing its silhouette and volume. An algorithm’s inability to account for these natural variations can lead to inconsistent recognition or misclassification, interpreting a cultural expression as an anomaly.

- Melanin’s spectral challenge ❉ The algorithms’ struggle with darker skin tones is inextricably linked to the visual challenges posed by varying melanin levels in imagery, a problem exacerbated by the lack of diverse training data. This affects the entire facial landscape, including the frame provided by textured hair.

The consequences ripple through various domains. In beauty tech, for instance, virtual try-on tools may fail to accurately superimpose hair colors or styles onto diverse complexions or hair textures. Product recommendation algorithms might default to formulations unsuitable for coiled hair, reflecting a training bias toward products designed for straight hair or looser curl patterns. This perpetuates a narrow definition of beauty, digitally reinforcing Eurocentric ideals and marginalizing the vibrant spectrum of textured hair.

Strategies for Equitable Algorithmic Delineation

Addressing algorithmic unfairness requires a multifaceted approach, drawing insights from critical race theory, sociology, and computer science. Solutions often combine technical adjustments with systemic changes.

- Diversification of datasets ❉ A primary intervention involves actively curating and expanding training datasets to include truly representative samples of all demographic groups, with particular attention to varied skin tones, facial geometries, and hair textures. This necessitates ethical data collection practices and careful annotation to avoid introducing new biases.

- Development of robust fairness metrics ❉ Researchers are proposing and evaluating various mathematical definitions of fairness (e.g. statistical parity, equalized odds, predictive parity) to measure and quantify algorithmic bias. The challenge lies in selecting appropriate metrics for specific applications, recognizing that no single metric can satisfy all fairness criteria simultaneously.

- Transparent and auditable algorithms ❉ Promoting explainable AI (XAI) and requiring regular, independent audits of algorithmic systems can shed light on their internal workings, allowing for identification and remediation of biased decision pathways. This provides a mechanism for accountability.

- Interdisciplinary collaboration and diverse development teams ❉ The creation of fair algorithms necessitates collaboration between computer scientists, ethicists, social scientists, and community advocates. Diverse teams are more likely to identify and mitigate biases, as they bring varied perspectives and lived experiences to the design and development process. This echoes the holistic approach to care within ancestral communities.

- Policy and regulation ❉ Governmental and regulatory bodies are increasingly exploring frameworks to mandate algorithmic accountability and fairness, particularly in high-stakes domains such as employment, housing, credit, and law enforcement. These policies aim to create legal mechanisms for addressing algorithmic discrimination.
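The fairness metrics named in the list above (statistical parity, equalized odds, predictive parity) can each be computed from a per-group tally of correct and incorrect predictions. The following is a minimal sketch on invented counts; the helper names are hypothetical, and it assumes each group contains both positive and negative cases and receives at least one positive prediction.

```python
def make_records(tp, fp, tn, fn):
    """Build (true_label, predicted_label) pairs from confusion counts."""
    return [(1, 1)] * tp + [(0, 1)] * fp + [(0, 0)] * tn + [(1, 0)] * fn


def group_metrics(records):
    """Compute the per-group rates that common fairness metrics compare."""
    tp = sum(1 for y, p in records if y == 1 and p == 1)
    fp = sum(1 for y, p in records if y == 0 and p == 1)
    tn = sum(1 for y, p in records if y == 0 and p == 0)
    fn = sum(1 for y, p in records if y == 1 and p == 0)
    return {
        "selection_rate": (tp + fp) / len(records),  # statistical parity
        "tpr": tp / (tp + fn),                       # equalized odds (part 1)
        "fpr": fp / (fp + tn),                       # equalized odds (part 2)
        "ppv": tp / (tp + fp),                       # predictive parity
    }


# Invented outcomes for two groups of 100 people each.
metrics_a = group_metrics(make_records(tp=40, fp=10, tn=40, fn=10))
metrics_b = group_metrics(make_records(tp=15, fp=5, tn=65, fn=15))

# A gap of zero on a given rate means that criterion is satisfied.
gaps = {name: abs(metrics_a[name] - metrics_b[name]) for name in metrics_a}

for name, gap in gaps.items():
    print(f"{name:<15} A={metrics_a[name]:.3f}  B={metrics_b[name]:.3f}  gap={gap:.3f}")
```

In this toy example the gaps differ in size from one metric to the next, so a system judged nearly fair under predictive parity looks clearly unfair under statistical parity. Reporting several metrics side by side makes that trade-off explicit.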

Algorithmic Fairness ultimately seeks to ensure that as technology continues its rapid advancement, it does so in a manner that respects and affirms the diverse tapestry of human heritage. It is a call to align the precision of computation with the wisdom of human empathy, creating digital systems that serve as tools of equity, not instruments of inherited bias. The work continues to gain ground, driven by the persistent voices of those who demand to be fully seen and understood in every digital interaction.

Reflection on the Heritage of Algorithmic Fairness

As we journey through the intricate definitions and profound implications of Algorithmic Fairness, a resonant truth emerges: its pursuit is not merely a technical endeavor but a deep, soulful reconnection to ancient wisdom. The heart of this work beats with the rhythm of our ancestors, those who understood implicitly the inherent value of every strand, every texture, every unique crown. Our quest for fairness in digital realms is a contemporary echo of the communal spirit that nurtured Black and mixed-race hair traditions through generations, ensuring each coil and braid received bespoke attention, honored for its identity and its journey.

The tender thread that connects our past to our present is spun from shared experiences of visibility and invisibility, of recognition and erasure. For centuries, textured hair has navigated spaces that sought to diminish its splendor, to force it into narrow, Eurocentric molds. This historical suppression finds a chilling parallel in the algorithmic systems that misrecognize darker complexions or overlook the distinctive features of our hair. Yet, just as our forebears transformed adversity into resilience, cultivating practices of care that became acts of defiance and self-affirmation, so too can we guide these digital creations toward a more inclusive vision.

The unbound helix of our identity, expressed so vividly through our hair, demands that technology serve as a mirror, reflecting our full, radiant truth. This requires us to imbue artificial intelligence with the empathy and cultural competence that have always been the bedrock of our hair heritage. It means consciously feeding these systems with images that celebrate the glorious spectrum of Black and mixed-race beauty, teaching them to see not through a “coded gaze” but through eyes that appreciate the rich diversity of human expression.

The lessons from ancient hair rituals (the meticulous oiling, the careful detangling, the protective styling) offer a profound metaphor for the intentionality required in crafting fair algorithms. Each step is a deliberate act of care, designed to prevent breakage, to promote health, and to honor identity.

We are called to be modern custodians of this ancestral wisdom, translating its timeless principles into the digital age. The evolution of Algorithmic Fairness is a testament to our collective capacity to learn, to adapt, and to insist on justice. It affirms, with hope, the power of the human spirit to shape technology not as a tool of oppression but as an ally in affirming the dignity and beauty of all, especially those whose heritage embodies enduring strength and breathtaking self-expression. The journey toward true Algorithmic Fairness is long, certainly, but every step taken to ensure technology truly “sees” us, truly respects our heritage, is a step closer to a future where all crowns shine brightly, fully acknowledged and celebrated.

References

- Benjamin, Ruha. Race After Technology: Abolitionist Tools for the New Jim Code. Polity Press, 2019.

- Browne, Simone. Dark Matters: On the Surveillance of Blackness. Duke University Press, 2015.

- Buolamwini, Joy, and Timnit Gebru. “Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification.” Proceedings of Machine Learning Research 81 (2018): 77-91.

- Noble, Safiya Umoja. Algorithms of Oppression: How Search Engines Reinforce Racism. New York University Press, 2018.

- Patty, John W., and Elizabeth Maggie Penn. “Algorithmic Fairness and Statistical Discrimination.” Philosophy & Theory in Biology 14, no. 0 (2022).

- Sattigeri, Pragnya, et al. “Benchmarking algorithmic bias in face recognition: An experimental approach using synthetic faces and human evaluation.” Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023.

- Shankar, Shreya, et al. “No classification without representation: Assessing gender bias in image classification using gendered objects.” Proceedings of the 2017 AAAI/ACM Conference on AI, Ethics, and Society, 2017.

- Wieringa, Ronald J. “Responsibility and bias in machine learning.” Ethical and Social Implications of Artificial Intelligence. Springer, Cham, 2020.