Fundamentals

The intricate dance of human experience, passed down through generations, finds its echo in the subtle patterns of our being, not least within the crowning glory of our textured hair. As digital advancements extend their reach into nearly every facet of our daily existence, they bring with them both promise and, at times, a disquieting reflection of societal imperfections. Within this digital realm, the concept of Algorithmic Bias stands as a stark reminder that technology, while appearing neutral, can indeed carry the invisible imprints of human prejudice and oversight.

At its simplest, Algorithmic Bias refers to systematic, repeatable errors in a computer system that create unfair or skewed results. These distortions often arise from flawed assumptions in the algorithm’s design, unrepresentative or imbalanced training data, or the ways in which the system is applied. Picture a lens crafted by hands unaware of the full spectrum of light; its vision of the world will inevitably miss certain hues, certain shades. This is the heart of algorithmic disparity: a technological framework unintentionally, or sometimes intentionally, failing to perceive or represent the rich diversity of human experience, particularly when it pertains to those whose heritage and appearance deviate from a presumed norm.

Consider the initial impulses that guide creation, whether it be a basket woven by ancestral hands or a complex code penned in the modern era. When these initial impulses, or the materials they draw upon, possess an inherent tilt towards one form over others, the resulting construct will mirror that inclination. The meaning of Algorithmic Bias, in this context, reaches beyond a mere technical glitch; it speaks to the echoes of historical exclusion and societal marginalization that can find new life within lines of code. It is the unintentional perpetuation of familiar inequalities, digitally amplified, shaping how certain identities are seen, understood, and even valued by systems that govern access and opportunity.

The challenge for our textured hair, a profound symbol of heritage and identity for Black and mixed-race communities, arrives when these systems are built upon datasets overwhelmingly drawn from those with straighter hair types. An algorithm trained predominantly on images of hair that hangs straight will inevitably struggle to accurately identify, classify, or even perceive the vibrant coil, the resilient curl, the intricate loc. The digital eye, unaccustomed to the nuances of shrinkage, the majesty of volume, or the myriad styles unique to textured strands, might mislabel, misunderstand, or simply overlook. This becomes a digital erasure, silently rendering invisible a significant portion of our global hair story.

This phenomenon extends beyond mere classification. When artificial intelligence powers hair product recommendations, virtual hairstyle try-ons, or facial recognition systems that may misinterpret or misidentify individuals based on their hair’s natural form, the consequences can be more than inconvenient. They can reinforce feelings of otherness, limit access to tailored solutions, and perpetuate a narrow standard of beauty that excludes the vast spectrum of hair heritage. It is a quiet form of bias, sometimes subtle, sometimes strikingly apparent, yet always carrying the potential to ripple through lived experiences.

Algorithmic Bias, at its elemental level, is the unintended yet systemic leaning of digital systems, reflecting and amplifying the societal blind spots that have historically overlooked the textured hair of our ancestors and communities.

Understanding Algorithmic Bias involves recognizing its presence in the most unexpected corners of our digital world. Think of everyday applications, from social media filters that smooth away natural curls to virtual meeting platforms that struggle with darker complexions and intricate hairstyles. These failures do not always stem from malicious intent; more often they are the consequence of datasets that lack genuine representation, designers who may not possess a breadth of cultural understanding, or testing methodologies that fail to consider diverse user experiences.

It is a technological oversight with very human consequences, especially for those whose hair is a direct link to their lineage and unique cultural expressions. The systems, much like a historical archive compiled with a singular lens, fail to honor the full spectrum of beauty and being.

Intermediate

Moving beyond the foundational understanding, the intermediate explanation of Algorithmic Bias delves deeper into its mechanisms and the nuanced ways it entrenches itself within digital frameworks. It is not merely a random error; rather, it represents a predictable outcome stemming from particular choices made throughout the life cycle of an algorithm, from its initial conception to its deployment in the world. For our textured hair communities, this means examining how the very fabric of digital sight and assessment can become warped when the rich legacy of Black and mixed-race hair remains unrepresented or is actively miscategorized within the informational streams that feed these systems.

The roots of algorithmic disparity often lie in the datasets upon which these systems are trained. Imagine building an ancestral quilt using only scraps of one particular fabric. While beautiful in its own right, it would fail to convey the expansive palette of heritage, the diverse textures, and the varied techniques that tell a fuller story.

Similarly, if datasets for image recognition, facial analysis, or even beauty trend forecasting overwhelmingly comprise images of straight or loosely wavy hair, any system built upon them will inherit this skewed perspective. The algorithm, in its learning, develops a ‘picture’ of hair that is incomplete, thereby creating a digital echo of a long-standing societal bias that has historically marginalized textured strands.
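One way to make this skew visible is to count how often each hair category appears in a dataset’s labels before any model is trained. The sketch below is a minimal illustration rather than a description of any real dataset: the category names, the counts, and the 10% threshold are all hypothetical assumptions chosen for the example.

```python
from collections import Counter


def representation_report(labels, min_share=0.10):
    """Return each label's count, its share of the dataset, and an underrepresentation flag.

    `labels` holds one hair-category label per image. The 10% default is an
    arbitrary illustrative threshold, not an established standard.
    """
    counts = Counter(labels)
    total = sum(counts.values())
    report = {}
    for label, count in counts.items():
        share = count / total
        report[label] = {
            "count": count,
            "share": share,
            "underrepresented": share < min_share,
        }
    return report


# Hypothetical label counts for an image dataset (invented for illustration).
labels = (
    ["straight"] * 700
    + ["wavy"] * 220
    + ["curly"] * 50
    + ["coily"] * 25
    + ["locs"] * 5
)

report = representation_report(labels)
# Print categories from most to least represented, flagging the sparse ones.
for label, row in sorted(report.items(), key=lambda item: -item[1]["share"]):
    flag = "  <-- underrepresented" if row["underrepresented"] else ""
    print(f"{label:<9}{row['count']:>5}{row['share']:>8.1%}{flag}")
```

A count like this says nothing about label quality or about who appears in the images, but it is an inexpensive first signal that a model’s ‘picture’ of hair will be incomplete.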

This lack of representation is not just a passive omission; it actively shapes the algorithm’s ‘understanding.’ When a system is shown countless examples of straight hair labeled as ‘normal’ or ‘ideal,’ and very few examples of coils, kinks, and locs, many of them mislabeled, its internal logic begins to treat textured hair as an outlier, an anomaly, or even a ‘defect’ to be corrected. This distorted perception can manifest in several ways:

- Misclassification of Hair Types ❉ Algorithms may struggle to accurately categorize natural textured hair, often mislabeling protective styles as ‘unnatural’ or failing to distinguish between different curl patterns.

- Biased Performance in Facial Analysis ❉ Buolamwini and Gebru (2018) found that commercial gender classification systems were markedly less accurate for darker-skinned women than for lighter-skinned men. Although that study measured skin type and gender rather than hairstyle, it suggests how features underrepresented in training data, including natural hairstyles common in diasporic communities, can become a point of algorithmic vulnerability.

- Limited or Inappropriate Product Recommendations ❉ E-commerce platforms and beauty apps, powered by recommendation algorithms, may suggest products or routines ill-suited for textured hair, simply because their models haven’t been trained on sufficiently diverse usage patterns or ingredient efficacies for these hair types.

Consider the pervasive nature of what some might call ‘digital colorism’ or ‘digital textureism’ when applied to beauty technology. If a virtual try-on feature for hairstyles offers a plethora of options for straight hair but only a handful for natural hair, or worse, if those few options appear distorted or unnatural, it speaks to an inherent bias in the underlying model. The delineation of desirable hair types within these systems implicitly privileges one aesthetic over another, echoing historical beauty standards that often sought to diminish or alter the natural appearance of Black and mixed-race hair.

Beyond the training data, the objectives and metrics chosen by algorithm designers can also contribute to bias. If the primary goal of an algorithm is to optimize for ‘simplicity’ or ‘uniformity,’ qualities often associated with straighter hair, then textured hair, with its inherent complexity, varied densities, and fluid forms, may be penalized by default. The very parameters of ‘success’ within the system can be unknowingly set to disadvantage certain hair realities. This is where the deeper layers of the algorithmic problem reveal themselves; it is not always overt discrimination but rather a quiet, sometimes invisible, tilting of the scales.

Algorithmic Bias, at a deeper level, is the silent digital propagation of societal biases, stemming from unrepresentative data and skewed design choices that consistently misinterpret or underrepresent the rich forms of textured hair heritage.

Understanding Algorithmic Bias as it affects textured hair thus requires acknowledging the full cycle of its creation and impact. It means questioning not just the final output but the entire upstream process: who collected the data, what was deemed ‘representative,’ who designed the metrics, and whose lived experiences were considered in the testing phase. For those of us who carry the legacy of deeply rooted hair traditions, this understanding is vital. It allows us to recognize when technology, intended to serve, instead creates new barriers or perpetuates old wounds.

It helps us advocate for systems that see us, truly see us, in all our unique and beautiful hair expressions. It challenges us to demand that the digital landscape mirrors the authentic diversity of our physical world, particularly concerning the hair that connects us to our very source.

Academic

As an academic concept, Algorithmic Bias transcends a mere technical malfunction, representing a profound sociological and ethical challenge embedded within computational systems. It is the systematic and repeatable production of unfair outcomes by an algorithmic system, not as a random occurrence, but as an inherent property derived from biased inputs, flawed design principles, or inadequate evaluation metrics. This phenomenon frequently perpetuates or amplifies societal prejudices, particularly those concerning marginalized groups, often rendering invisible the rich tapestry of human diversity and contributing to systemic inequalities. When examined through the lens of textured hair heritage, Algorithmic Bias reveals itself as a complex interplay of historical marginalization, data science, and the very construction of digital perception.

The meaning of Algorithmic Bias, from an academic perspective, is rooted in the epistemology of data and the philosophy of artificial intelligence. It asserts that algorithms, far from being objective, are reflections of the worldviews, values, and inherent limitations of their creators and the data they consume. In the context of textured hair, this translates into a digital ecosystem where recognition, classification, and even aesthetic preferences are inadvertently skewed. This distortion occurs because many widely used training datasets for computer vision and machine learning models have historically overrepresented individuals with Eurocentric features and hair textures, thereby creating a digital ‘norm’ against which all other forms of hair are implicitly measured and often found ‘lacking’ or ‘difficult’ to process.

One salient area of inquiry lies in the performance disparity of facial analysis technologies. A landmark study by Buolamwini and Gebru (2018), the Gender Shades project led from MIT’s Media Lab, uncovered significant disparities by skin type and gender in commercial gender classification systems. Evaluating these systems on the Pilot Parliaments Benchmark, a dataset balanced by gender and skin type, the authors found that error rates stayed at or below 0.8% for lighter-skinned male faces but rose as high as 34.7% for darker-skinned female faces. The study did not measure hair directly, yet its findings bear on hair, since hair texture and style are integral to how faces appear and to identity in many Black and mixed-race communities.

For instance, an algorithm struggling to accurately identify a dark-skinned woman with complex braided styles or a voluminous afro may misclassify her gender, misidentify her altogether, or simply fail to process her image. This is not merely a technical limitation; it is a manifestation of the dataset’s failure to adequately represent the diversity of human appearance, resulting in tangible disadvantages concerning surveillance, security, or even access to services.
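The kind of disaggregated evaluation that surfaces such disparities can be sketched in a few lines. The example below is a minimal illustration under stated assumptions: the record format, the helper `make_records`, and every number are hypothetical and invented for the example (they echo the general pattern of the findings, not the study’s actual data), and the binary gender labels simply mirror the classification task the study audited.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """Compute the misclassification rate for each (skin_tone, gender) subgroup.

    Each record is a dict with keys 'skin_tone', 'gender', 'predicted', 'actual'.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for record in records:
        group = (record["skin_tone"], record["gender"])
        totals[group] += 1
        if record["predicted"] != record["actual"]:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


def make_records(skin_tone, gender, n_correct, n_wrong):
    """Hypothetical helper: build synthetic evaluation records for one subgroup."""
    wrong_label = "male" if gender == "female" else "female"
    base = {"skin_tone": skin_tone, "gender": gender, "actual": gender}
    correct = [dict(base, predicted=gender) for _ in range(n_correct)]
    wrong = [dict(base, predicted=wrong_label) for _ in range(n_wrong)]
    return correct + wrong


# Invented results for 100 images per subgroup (illustrative only).
records = (
    make_records("lighter", "male", 99, 1)
    + make_records("lighter", "female", 93, 7)
    + make_records("darker", "male", 88, 12)
    + make_records("darker", "female", 66, 34)
)

rates = error_rate_by_group(records)
overall = sum(r["predicted"] != r["actual"] for r in records) / len(records)

print(f"overall error rate: {overall:.1%}")
for group, rate in sorted(rates.items(), key=lambda item: item[1]):
    print(f"{group[0]:<8}{group[1]:<8}{rate:.1%}")
```

Reporting only the overall figure would hide the widest gap, which appears at the intersection of two attributes rather than along either one alone. A hair-aware audit would add hair texture or style as a further grouping key, provided those attributes have been annotated with care.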

The long-term consequences of such systemic algorithmic misrecognition are far-reaching. Imagine a future where virtual professional networking platforms, job interview analysis tools, or even healthcare diagnostic systems incorporate facial recognition. If these tools disproportionately misidentify or struggle to process individuals with textured hair, it creates an invisible barrier to opportunity and equity.

Algorithmic disparity, then, is not merely a matter of technical error; it speaks to the insidious digital reproduction of societal exclusion, impacting economic mobility, social inclusion, and personal dignity. The very fabric of digital interaction, meant to connect and equalize, instead weaves patterns of disparity, echoing centuries-old biases against Black hair as ‘unprofessional’ or ‘unruly.’

The academic exploration of Algorithmic Bias unpacks how digital systems, through their training data and design, can become unwitting agents in perpetuating historical inequities, particularly manifest in their misrepresentation or disregard for textured hair.

The elucidation of this bias requires a multidisciplinary approach, drawing from computer science, sociology, critical race theory, and cultural studies. It necessitates a critical examination of:

- Data Provenance ❉ Where did the data originate? Who collected it? What biases were inherent in the collection process (e.g. selection bias, observer bias)? For hair-related datasets, this often means examining whether historical categorizations, sometimes rooted in pseudoscientific racial hierarchies, inadvertently informed data labeling.

- Algorithmic Design ❉ Are the features prioritized by the algorithm intrinsically biased? For instance, if an algorithm prioritizes smooth, flowing lines, it might inherently disadvantage the complex geometries of tightly coiled hair.

- Evaluation Metrics ❉ How is ‘accuracy’ measured? If accuracy is only tested against a homogeneous ‘norm,’ then the performance disparities for diverse populations, particularly those with textured hair, will remain masked or dismissed as ‘edge cases.’

- Societal Impact ❉ What are the real-world implications of these biased outputs? How do they affect the agency, self-perception, and socio-economic outcomes of individuals and communities with textured hair?
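The masking effect described under Evaluation Metrics comes down to arithmetic: overall accuracy is an average weighted by each group’s share of the test set. As a worked illustration with invented numbers (the symbols $w_g$ for a group’s share and $a_g$ for its accuracy are introduced here and do not come from any cited study), suppose 95% of test images show the majority hair type and are classified with 98% accuracy, while the remaining 5% show textured hair and are classified with 60% accuracy:

$$
A_{\text{overall}} = \sum_{g} w_g \, a_g = (0.95)(0.98) + (0.05)(0.60) = 0.931 + 0.030 = 0.961
$$

A headline figure of 96.1% looks strong even though the system fails on two of every five textured-hair images, which is why accuracy needs to be reported per group as well as in aggregate.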

A powerful historical example that undergirds this contemporary algorithmic challenge is the insidious nature of hair typing systems. While seemingly benign, the widespread adoption of systems like Andre Walker’s hair typing chart (Types 1 through 4, later extended by the natural hair community with subcategories such as 4C for the tightest coils) and its subsequent academic and commercial elaboration have, despite intentions, contributed to a hierarchical understanding of hair, implicitly positioning straighter hair as the baseline and textured hair as increasingly ‘other.’ Even when adapted for commercial use, these categorizations, initially developed from limited samples, can lead to oversimplified or inaccurate digital representations. For example, a beauty tech company creating an AI-powered hair diagnostic tool might inadvertently bake in these historical biases if their initial datasets for ‘Type 4C’ are not robust, truly representative, and ethically sourced. The algorithmic system then inherits the partial understanding, perpetuating the challenges that many Black and mixed-race individuals face in finding products or services that genuinely understand their hair’s distinct properties.

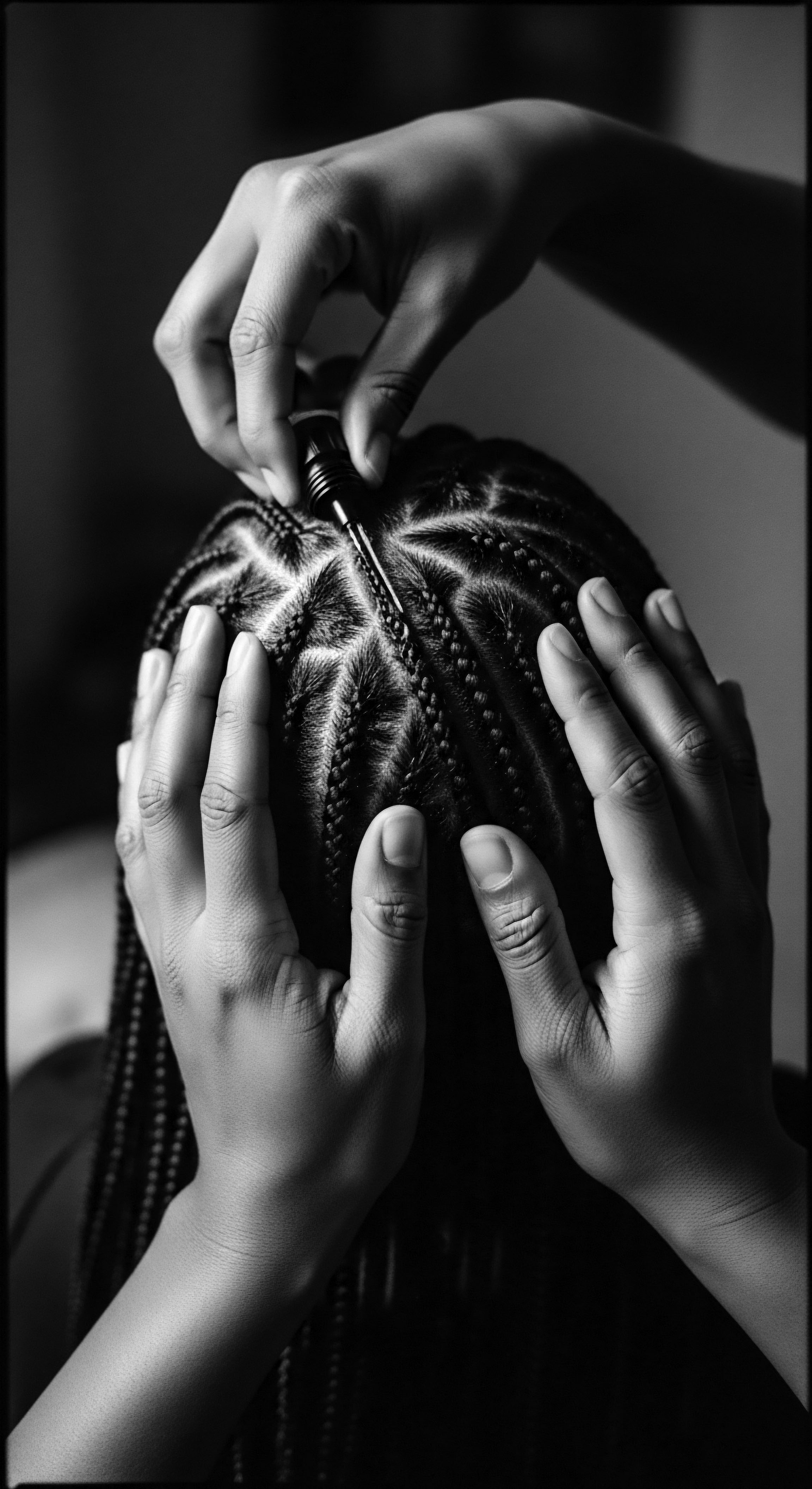

Consider further the cultural practices surrounding textured hair, from ancient braiding techniques to modern protective styles. These are not merely aesthetic choices; they are deeply ingrained cultural expressions, sometimes denoting status, marital availability, or spiritual connection within ancestral communities. An algorithm, devoid of this cultural context, might interpret these complex styles as ‘messy,’ ‘unprofessional,’ or simply ‘unrecognizable,’ leading to misjudgment in automated systems used for employment screening or educational settings. The inability of the algorithm to grasp the significance of these hairstyles reflects a deeper systemic issue: the failure to incorporate culturally sensitive data and a broader understanding of human diversity into the very foundations of artificial intelligence development.

The consequences are profound, reinforcing stereotypes and imposing a monolithic vision of acceptable appearance, thereby hindering the self-expression and cultural affirmation that hair holds. This lack of informed data collection becomes a silent bias, reflecting centuries of Eurocentric beauty ideals digitally re-encoded.

The very substance of the algorithmic issue concerning textured hair involves more than just a lack of visual data; it extends to the underlying understanding of hair biology and care. Many traditional hair practices, steeped in ancestral wisdom, focused on moisture retention, scalp health, and gentle handling, principles that often contrast with modern Western hair care paradigms historically designed for straighter hair. When algorithms are trained on data derived from these dominant paradigms, they may recommend products or routines that are detrimental to textured hair. This reflects a fundamental disconnect between the algorithm’s ‘knowledge base’ and the biological and cultural realities of diverse hair types, leading to ineffective or even harmful digital guidance.

Addressing Algorithmic Bias within the context of textured hair requires a concerted effort to diversify datasets, engage ethnically diverse designers and evaluators, and adopt culturally sensitive design principles. It means moving beyond a ‘one-size-fits-all’ approach and instead striving for algorithms that are aware of, and responsive to, the rich diversity of human hair. The goal is to build systems that not only accurately perceive but also truly understand the full spectrum of hair types, honoring their biological complexities and their profound cultural significance.

This is an ongoing academic endeavor, pushing the boundaries of fair and equitable AI, seeking to rectify historical injustices and foster a more inclusive digital future where every strand finds its rightful place. It is a call for digital systems to truly see, truly respect, and truly serve the vibrant, diverse beauty that has always resided in the heritage of human hair.

- Rethinking Data Collection ❉ Ethical data sourcing and the creation of comprehensive, culturally representative datasets of textured hair are paramount. This involves actively seeking out and annotating images across the full spectrum of coil patterns, densities, and styles, including protective styles, in various lighting conditions and contexts.

- Bias Detection and Mitigation ❉ Developing robust methodologies for identifying and quantifying bias in hair-related algorithms during their development phase, not just post-deployment. This includes implementing fairness metrics that specifically assess performance across different hair textures and racial groups.

- Interdisciplinary Collaboration ❉ Bridging the gap between computer scientists, anthropologists, hair stylists, and cultural historians to ensure that the understanding of hair, its biology, and its cultural significance is deeply embedded in algorithmic design. This ensures a holistic approach, moving beyond mere technical solutions.
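As one concrete illustration of the mitigation step, a commonly used stopgap when a training set cannot yet be rebalanced is to weight each sample inversely to the size of its group, so that every group contributes equally to the training loss. The sketch below assumes hypothetical group names and counts; the formula, N / (k × n_g) for N samples, k groups, and n_g samples in a group, is the same heuristic scikit-learn documents for its ‘balanced’ class weights. It is offered as an example of the idea, not as the method this article prescribes.

```python
from collections import Counter, defaultdict


def inverse_frequency_weights(groups):
    """Assign each sample the weight N / (k * n_g).

    N is the number of samples, k the number of distinct groups, and n_g the
    number of samples in that sample's group.
    """
    counts = Counter(groups)
    n_samples = len(groups)
    n_groups = len(counts)
    return [n_samples / (n_groups * counts[group]) for group in groups]


# Hypothetical hair-texture group for each training image (invented counts).
groups = ["straight"] * 80 + ["curly"] * 15 + ["coily"] * 5

weights = inverse_frequency_weights(groups)

# Sum the weights per group to confirm each group now carries equal total weight.
group_totals = defaultdict(float)
for group, weight in zip(groups, weights):
    group_totals[group] += weight

for group, total in group_totals.items():
    print(f"{group:<9}total weight {total:.2f}")
```

Reweighting only rebalances what has already been collected: five images of coily hair, however heavily weighted, cannot convey the range of styles, densities, and lighting conditions that a representative dataset would, so it complements rather than replaces the data collection described above.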

Reflection on the Heritage of Algorithmic Bias

As we close this circle of inquiry into Algorithmic Bias, particularly as it touches the very essence of our textured hair, we find ourselves standing at a significant juncture. The journey from the primal rhythms of ancestral care, through the living traditions that shaped our beauty, to the digital currents of our contemporary world reveals a continuous thread of resilience, adaptability, and undeniable beauty. Algorithmic Bias, in its myriad forms, serves as a poignant reminder that while technology strides ever forward, it often carries with it the unconscious echoes of societal biases that have long cast shadows upon the heritage of Black and mixed-race hair.

Yet, within this recognition lies a powerful invitation: an opportunity to reclaim, to redefine, and to reshape the narratives that technology helps construct. Our hair, a living archive of history, tradition, and personal story, demands to be seen and understood in its fullness. The challenge presented by Algorithmic Bias is not a dismissal of progress but a call for more enlightened progress: a progress that genuinely reflects the kaleidoscope of human experience. It compels us to demand algorithms that are not merely efficient but are also just; not merely intelligent but also wise, steeped in the knowledge and respect for diverse cultural expressions.

The deep wisdom passed down through generations (the knowledge of natural emollients, the artistry of braiding, the communal rituals of care) provides a profound blueprint for how we might approach the future of digital systems. It reminds us that true understanding comes from a holistic perspective, one that honors the uniqueness of each strand and the collective strength of shared heritage. The journey of our hair, from its elemental biology to its vibrant cultural expressions, has always been one of adaptability and enduring beauty. By shining a discerning light on Algorithmic Bias, we advocate for a digital future where every coil, every kink, every loc is not only seen but celebrated, understood, and rightfully honored.

This path forward is not just about correcting code; it is about honoring lineage, affirming identity, and ensuring that the digital reflections of humanity are as rich and varied as humanity itself. This is the enduring message, a tender whisper from the Soul of a Strand, reminding us that our heritage, in all its glory, must never be lost in translation, whether through the whispers of history or the logic of algorithms.

References

- Buolamwini, J. & Gebru, T. (2018). Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification. Proceedings of the 1st Conference on Fairness, Accountability and Transparency, 77-91.

- Noble, S. U. (2018). Algorithms of Oppression: How Search Engines Reinforce Racism. New York University Press.

- Benjamin, R. (2019). Race After Technology: Abolitionist Tools for the New Jim Code. Polity Press.

- Eubanks, V. (2018). Automating Inequality: How High-Tech Tools Profile, Police, and Punish the Poor. St. Martin’s Press.

- O’Neil, C. (2016). Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy. Crown.

- Dumas, D. (2020). Hair Story: Untangling the Roots of Black Hair in America. St. Martin’s Griffin.

- Byrd, A. D. & Tharps, L. D. (2014). Hair Story: Untangling the Roots of Black Hair in America. St. Martin’s Press.

- Gale, R. & Lopez, C. A. (2020). Textured Hair: A Framework for Understanding and Caring for Diverse Hair Types. Journal of Cosmetic Dermatology, 19(11), 2911-2917.

- Hunter, L. (2011). Beauty, Power, and Resistance: A Global History of Hair. University of Michigan Press.