Fundamentals

The concept of AI Visual Bias, when considered through the sacred lens of textured hair heritage, points to a disharmony within artificial intelligence systems, particularly those that interpret images. At its most straightforward, AI Visual Bias names a condition where an automated system, designed to perceive and process visual data, inadvertently or systematically misrepresents, misidentifies, or overlooks certain visual characteristics. This is not a random occurrence; it stems from the very foundation of how these systems learn. They are trained on vast collections of images, and if these collections do not authentically reflect the full spectrum of human diversity, especially the glorious expanse of hair textures, the systems will carry this incomplete sight forward.

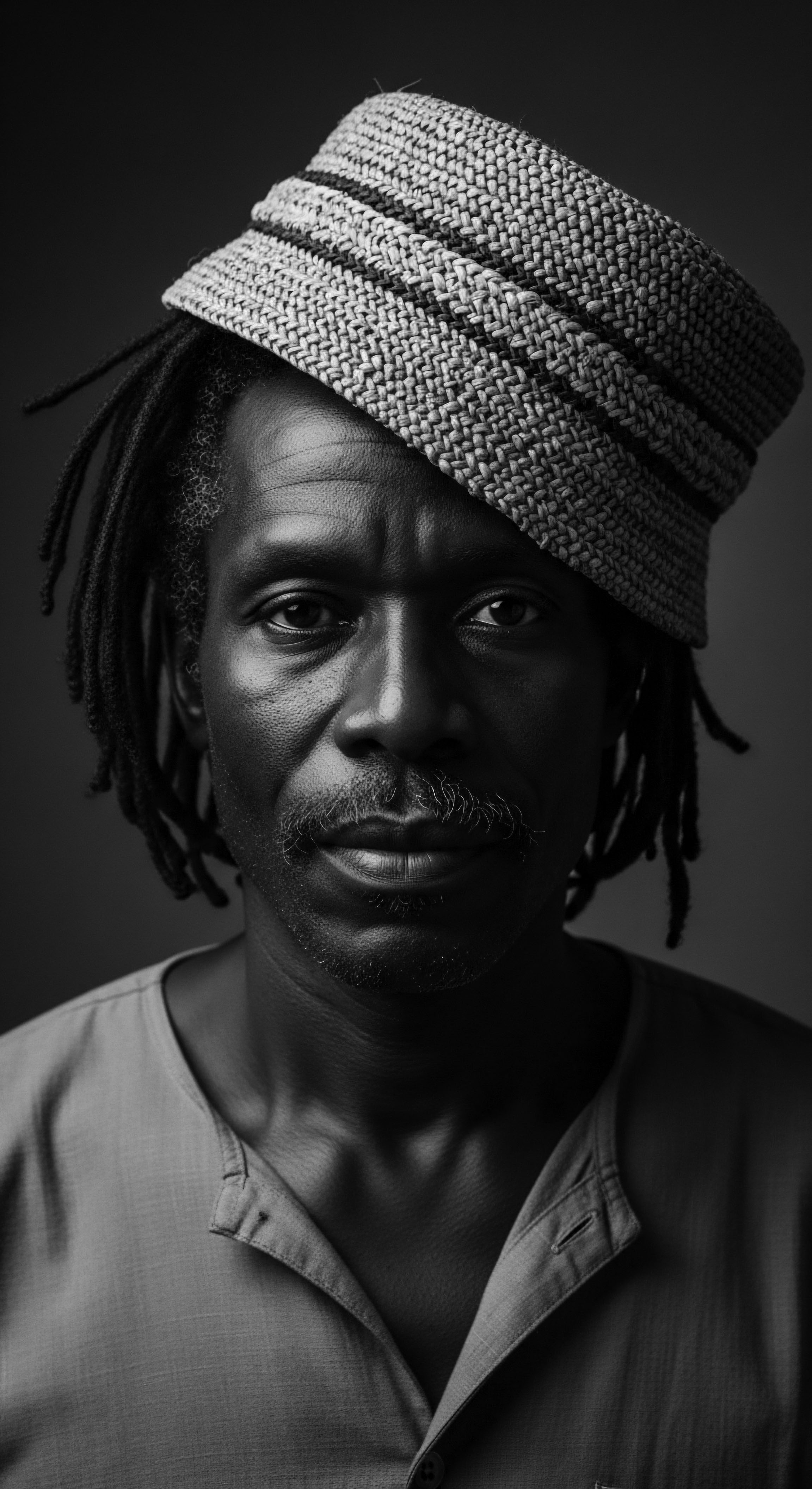

A basic understanding reveals that these digital entities learn from what they are shown. When the visual nourishment provided to an AI model is overwhelmingly homogenous, featuring predominantly Eurocentric hair types, the algorithms develop a skewed perception of what is considered “normal” or “standard” in terms of hair. Consequently, hair that deviates from this narrow visual diet ❉ be it the tightly coiled strands of Type 4 hair, the spirited waves of Type 3 hair, or the protective majesty of locs and braids ❉ can become less recognizable to the system, leading to misclassifications or even a complete lack of recognition. This oversight can manifest as an inability to accurately segment hair regions, to recommend suitable products, or even to recognize individuals themselves.

Consider a foundational principle of human perception: what we see shapes our understanding. Similarly, the digital eye of AI constructs its understanding from the pixels it consumes. When millions of images used for training primarily display straight or loosely wavy hair, the AI develops a form of “visual myopia” concerning the intricate patterns and volumes of textured hair.

This affects everything from simple image searches to more complex applications within the beauty sector. Such a system might struggle with the nuances that distinguish a Bantu knot from a simple bun, or the specific qualities of a protective style that have shielded hair for generations.

AI Visual Bias reflects a digital myopia, misrepresenting textured hair due to incomplete training data, echoing historical societal oversights.

The initial design of these algorithms also plays a part. Developers, sometimes without conscious intent, embed their own implicit visual preferences or simply replicate prevailing societal norms by selecting or curating datasets that lack comprehensive representation of diverse hair textures. This becomes a silent yet powerful force, contributing to a digital rendering of our world that is less inclusive, less accurate, and fundamentally less just for communities with rich hair traditions. It is a technological echo of long-standing biases that have marginalized textured hair in visual media and societal standards across centuries.

Intermediate

Moving beyond the initial grasp of AI Visual Bias, we delve into its deeper mechanisms, appreciating how this systemic oversight intertwines with our shared human history of perception and societal expectation. The core meaning here expands to include the methods by which visual prejudice becomes embedded within artificial intelligence. This is a complex interplay, involving not merely the quantity of diverse images but also the quality, annotation, and the very conceptual frameworks that guide AI development.

The visual data that trains AI systems is not neutral; it is a repository of human-created imagery, which inherently carries the societal gaze of its origin. For centuries, visual representations of beauty, power, and professionalism have been disproportionately centered on Eurocentric features, often marginalizing or misrepresenting textured hair. When this historical visual narrative becomes the primary training material for AI, the algorithms learn to prioritize and replicate these dominant aesthetics. As a result, a system might assign lower “confidence scores” to images of individuals with highly coiled or tightly braided hair, or it might struggle to discern individual features within such hair because it has seen too few training examples of those unique patterns and volumes.

The Echo of Omission in Data Collection

One of the most significant aspects contributing to AI Visual Bias stems from the inherent limitations and historical omissions within datasets. AI models acquire their visual understanding by analyzing countless images, learning to identify patterns, shapes, and textures. If these foundational datasets do not represent the full spectrum of human hair, particularly the vast and varied world of textured hair, the AI’s “vision” will remain incomplete.

A system trained overwhelmingly on images of straight hair may perceive tightly coiled strands as amorphous, or even as a background element, rather than a distinct, identifiable feature. This creates a representational void where certain hair types are digitally invisible or distorted.

- Data Imbalance ❉ Training datasets frequently contain a disproportionately small number of images featuring individuals with Afro-textured, coily, or tightly curled hair compared to straight or wavy hair. This skewing in data directly affects the AI’s ability to generalize and perform accurately across diverse hair types.

- Annotation Bias ❉ Even when images of textured hair are present, the way they are labeled or categorized by human annotators can introduce bias. If annotators, consciously or unconsciously, apply descriptors that align with Eurocentric beauty standards, or if they struggle to precisely categorize the numerous classifications of textured hair (e.g. distinguishing between different curl patterns within Type 3 or Type 4 hair), this human bias translates into the AI’s learning.

- Historical Precedent ❉ The bias in datasets is not arbitrary; it mirrors centuries of visual media that have marginalized and misrepresented Black and mixed-race hair. Photography, film, and advertising have long privileged certain hair types, creating a visual legacy that AI now inherits and, without careful intervention, perpetuates.
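The data imbalance described in the list above can be made visible with a simple count. The following is a minimal sketch, assuming each training image already carries a single hair-type label; the label names, the toy dataset, and the 10% threshold are all hypothetical and chosen only for illustration.

```python
from collections import Counter


def hair_type_shares(labels):
    """Return each label's share of the dataset as a fraction of all images."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.items()}


def underrepresented(labels, min_share=0.10):
    """List the labels whose share falls below a chosen (hypothetical) threshold."""
    shares = hair_type_shares(labels)
    return sorted(label for label, share in shares.items() if share < min_share)


# Hypothetical annotation labels for a 100-image toy dataset.
labels = ["straight"] * 70 + ["wavy"] * 20 + ["curly"] * 6 + ["coily"] * 4

print(hair_type_shares(labels))
print(underrepresented(labels))
```

An audit like this only surfaces imbalance in whatever labels exist; it cannot detect the annotation bias described above, where the labels themselves are imprecise or skewed.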

Beyond the mere presence or absence of images, the meaning also includes the inherent bias in how hair is perceived and categorized even in human society. Studies, including Koval and Rosette’s (2020) work on natural hair bias in job recruitment, have found that textured hair, particularly natural Afrocentric styles, has faced discrimination in professional and educational settings, being deemed “unprofessional” or “unacceptable”. This societal conditioning, when translated into the human curation of AI training data, subtly guides the AI to associate certain hair types with negative or less desired attributes, or simply to not recognize them as valid or complete.

Algorithmic Reinforcement of Visual Norms

Once the data is ingested, the very algorithms designed to learn from it can reinforce existing visual norms. Because training typically minimizes average error across all examples, these algorithms tend to prioritize the patterns that appear most frequently, thus amplifying the dominance of certain hair textures within their learned models. This is not necessarily malicious, but rather a reflection of statistical learning applied to biased inputs. A system might, for example, be optimized for speed or efficiency, and in doing so, it may inadvertently sacrifice accuracy for underrepresented visual categories, such as the diverse range of coily and curly hair patterns.
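A toy calculation can show how a single headline accuracy figure hides this effect. The sketch below assumes a hypothetical two-label dataset and a degenerate model that always predicts the most frequent label; it is not a description of any real system.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


# Hypothetical ground truth: 90 images of straight hair, 10 of coily hair.
y_true = ["straight"] * 90 + ["coily"] * 10

# A degenerate model that has learned only the dominant pattern.
y_pred = ["straight"] * len(y_true)

overall = accuracy(y_true, y_pred)

# Accuracy restricted to the underrepresented label.
coily_idx = [i for i, t in enumerate(y_true) if t == "coily"]
coily_acc = accuracy([y_true[i] for i in coily_idx],
                     [y_pred[i] for i in coily_idx])

print(f"overall accuracy: {overall:.2f}")
print(f"accuracy on coily hair: {coily_acc:.2f}")
```

The model looks strong on average while never recognizing the minority label at all, which is why aggregate accuracy on its own says little about how a system treats underrepresented hair types.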

AI systems, fed by imbalanced datasets, perpetuate a visual language that overlooks the intricate variations of textured hair.

The consequences of this algorithmic reinforcement extend into practical applications. In the beauty industry, AI-powered tools that offer virtual try-ons or personalized product recommendations might struggle with or entirely exclude options for textured hair, simply because the underlying models do not possess a sufficiently rich visual understanding of these hair types. This further entrenches the perception that textured hair is an outlier, rather than an integral part of the human visual landscape. It is a modern manifestation of an ancient challenge: how to truly see and honor the full breadth of human appearance, especially when it diverges from established, often exclusionary, norms.

Academic

To delve into the academic meaning of AI Visual Bias is to dissect a phenomenon that extends beyond mere technical glitch, revealing itself as a deeply embedded structural inequity within computational systems, particularly as it pertains to the visual representation of textured hair. This is a complex interplay of historical visual subjugation, contemporary data imbalances, and algorithmic design choices that collectively diminish the recognition, accurate portrayal, and cultural integrity of Black and mixed-race hair experiences.

AI Visual Bias, in an academic context, represents a systematic statistical skew or discriminatory outcome in artificial intelligence systems’ interpretation and generation of visual information, stemming from unrepresentative, historically biased, or improperly labeled training datasets and compounded by algorithmic architectures that prioritize dominant visual patterns. Its meaning is rooted in the computational reproduction of societal biases, where the machine ‘learns’ to perceive and categorize visual attributes, such as hair texture, through a lens conditioned by existing power structures and aesthetic hierarchies that have historically marginalized Black and mixed-race hair. The consequence is a technological mirroring of visual prejudice, where the digital realm perpetuates the cultural erasure and misrecognition of a rich hair heritage, hindering fair access to services, accurate representation, and the affirmation of identity for affected communities.

Echoes from the Source: Historical Roots of Visual Erasure

The challenge of AI Visual Bias in relation to textured hair is not an isolated modern problem; it is a contemporary manifestation of a deeply rooted historical visual bias. For centuries, systems of representation, from early portraiture to mass media, have struggled to accurately, respectfully, or even sufficiently portray the multifaceted beauty of Black and mixed-race hair. Early photographic film, for instance, was often calibrated for lighter skin tones, rendering darker complexions and intricate hair textures less visible or distorted in its nascent visual records. This historical omission created a visual deficit, a silence in the archive that AI systems now inherit.

Traditional African societies, conversely, held hair as a potent symbol of identity, social status, spiritual connection, and collective history. Hairstyles conveyed detailed information about one’s age, marital status, tribal affiliation, and even life events. The act of hair styling was communal, a tender thread connecting individuals to their lineage and community.

AI Visual Bias is a modern digital echo of historical visual prejudice, where the machine ‘learns’ the same societal oversights regarding textured hair.

This rich visual language of hair, however, was violently disrupted during the transatlantic slave trade. The forced shaving of heads upon arrival in the Americas was a deliberate act of cultural stripping, a brutal severing of identity and heritage. This dehumanizing practice initiated a long legacy of hair discrimination, where Afro-textured hair was denigrated and often deemed “unruly” or “unprofessional” in Western contexts.

The imposition of Eurocentric beauty standards led many to chemically alter their hair, seeking assimilation in oppressive environments. These historical narratives contribute to the underlying datasets for AI, where images of textured hair may be underrepresented, mislabeled, or simply perceived through a biased cultural lens.

The consequence is that AI systems, trained on this uneven visual terrain, inadvertently learn a version of history that has already been edited for a dominant perspective. They replicate the visual hierarchies of the past, making the recognition of textured hair more computationally challenging and prone to error.

The Tender Thread: Algorithmic Misrecognition and Its Impact

At the core of AI Visual Bias lies the algorithmic misrecognition of hair texture. AI models, particularly those using computer vision, break down images into features like edges, colors, and textures. For hair, this means analyzing curl patterns, volume, sheen, and density.

When training data is scarce for specific hair textures, the algorithms fail to build robust internal representations for those features. This leads to quantifiable disparities in performance.

A specific case that powerfully illuminates this connection to textured hair heritage and Black hair experiences arises from the landmark work of Joy Buolamwini and Timnit Gebru. While their seminal 2018 paper, “Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification,” primarily exposed racial and gender bias in facial analysis systems, its implications extend directly to the visual identification of individuals with textured hair. Their research revealed that commercial gender classification systems exhibited significantly higher error rates for darker-skinned women, with error rates reaching up to 34.7% for this demographic, compared to a mere 0.8% for lighter-skinned men.
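To put the two quoted figures side by side, the short calculation below expresses the disparity both as a gap in percentage points and as a ratio. It uses only the two error rates cited above; the variable names are illustrative.

```python
# Error rates quoted above from Buolamwini and Gebru (2018), in percent.
darker_women_error = 34.7
lighter_men_error = 0.8

# Absolute gap in percentage points.
gap_points = round(darker_women_error - lighter_men_error, 1)

# Relative disparity: how many times higher the worst-case error rate is.
ratio = round(darker_women_error / lighter_men_error, 1)

print(f"gap: {gap_points} percentage points")
print(f"ratio: roughly {ratio} times higher")
```

On these figures, the gap is about 34 percentage points, and the highest error rate is more than forty times the lowest.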

While the study focused on gender and skin tone, the mechanisms behind these disparities relate directly to the challenges AI faces with diverse hair textures. The underlying issue was a lack of diverse representation in the data used to build and benchmark these systems, which skewed overwhelmingly towards lighter-skinned subjects. When AI systems struggle even to detect a face or classify gender accurately for darker-skinned individuals, they are also likely to struggle with the features associated with those individuals, including their hair. A later study on neural compression for facial images specifically noted that “phenotype groups of darker skin tones, wide noses, curly hair, and monolid eye shapes suffer the most adverse impact in the facial recognition tasks”.

This finding suggests that the bias is not merely skin-deep but extends to critical identity markers such as hair texture, which is often tightly linked to racial and ethnic identity. Systems that have not been adequately trained to recognize the distinct patterns and volumetric properties of textured hair are prone to errors in detection and classification, and those errors are likely to be amplified for people with kinky or coily hair, which is statistically more prevalent among darker-skinned individuals.

The implications for individuals are significant. Imagine a scenario where an AI-powered styling app, designed to recommend hairstyles or products, consistently fails to recognize Type 4C coils or dreadlocks, offering only options for straightened or loosely curled hair. Such an experience can be deeply disheartening, a technological re-affirmation of historical exclusions. It reinforces the subtle yet powerful message that one’s natural hair, a profound connection to ancestral practices and identity, is not “seen” or valued by the prevailing digital gaze.

Beyond aesthetic applications, the meaning extends to critical domains like security and healthcare. If facial recognition systems, which often rely on overall head shape and features including hair, perform poorly for individuals with textured hair, it can lead to misidentification, false arrests, or denied access. In health tech, AI systems designed to analyze scalp conditions or hair health might lack the nuanced understanding required for textured hair, potentially leading to inaccurate diagnoses or inappropriate product recommendations.

The Unbound Helix: Reclaiming Visual Sovereignty and Ethical Innovation

The academic exploration of AI Visual Bias moves beyond identification to consider solutions rooted in restorative practices and cultural responsibility. This involves a commitment to building AI systems that actively acknowledge and celebrate the visual diversity of textured hair. The path forward demands an intentional re-education of algorithms, starting with the very datasets they learn from.

- Curating Representative Datasets ❉ A foundational step involves the creation of expansive, ethically sourced datasets that contain a truly proportional representation of all hair textures, particularly Afro-textured hair, in its myriad forms and styles. This cannot be a superficial inclusion; it must capture the subtle variations of curl patterns, density, sheen, and the cultural context of various styles like cornrows, Bantu knots, locs, and Afros. This requires collaboration with communities and individuals whose hair heritage is being represented.

- Bias Mitigation in Algorithmic Design ❉ Developers must implement deliberate strategies within algorithmic design to detect and correct for bias. This could involve techniques like “fairness metrics” that specifically measure performance across different hair types or demographic groups, ensuring that improvements in one area do not come at the expense of accuracy in another. It calls for a move away from simply optimizing for overall accuracy towards optimizing for equitable accuracy across all represented groups.

- Intersectional Awareness in Development ❉ A holistic approach necessitates acknowledging the intersectionality of identity. Bias does not exist in isolation; it often compounds across race, gender, skin tone, and hair texture. AI developers need to be trained in understanding these complexities, ensuring that systems are designed with an awareness of how different identity markers intersect to shape experiences of bias. This includes active participation from diverse teams in the development process, ensuring that varied perspectives inform the very creation of these technologies.
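The “fairness metrics” and intersectional awareness described in the list above can be sketched as a per-group accuracy report. This is a minimal illustration, not an established library API: the function names, the group labels, and the toy evaluation records are all hypothetical, and each group is an intersectional pair of (skin tone, hair type).

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, y_true, y_pred). Returns {group: accuracy}."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for group, y_true, y_pred in records:
        totals[group] += 1
        hits[group] += int(y_true == y_pred)
    return {group: hits[group] / totals[group] for group in totals}


def fairness_summary(records):
    """Report per-group accuracy, the worst-served group, and the best-worst gap."""
    per_group = accuracy_by_group(records)
    worst = min(per_group, key=per_group.get)
    best = max(per_group, key=per_group.get)
    return {
        "per_group": per_group,
        "worst_group": worst,
        "gap": per_group[best] - per_group[worst],
    }


# Hypothetical evaluation records: (group, true label, predicted label).
records = (
    [(("lighter", "straight"), 1, 1)] * 48
    + [(("lighter", "straight"), 1, 0)] * 2
    + [(("darker", "coily"), 1, 1)] * 7
    + [(("darker", "coily"), 1, 0)] * 3
)

summary = fairness_summary(records)
print(summary["per_group"])
print(summary["worst_group"], round(summary["gap"], 2))
```

Tracking the worst-served group and the gap, rather than a single overall score, is one way to move from optimizing overall accuracy towards the equitable accuracy the list calls for.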

The integration of culturally informed perspectives is paramount. Scholars and practitioners from fields such as anthropology, sociology, and Black studies can offer invaluable insights into the historical and social constructions of hair, guiding AI development towards systems that are not just technically proficient but also culturally competent and ethically sound. For instance, understanding the nuanced differences in hair care routines, styling techniques, and cultural significance across the diaspora ❉ from West African threading rituals to Caribbean natural hair movements ❉ is essential for building AI that truly understands and respects these traditions.

Consider the work needed to train AI models for hair health analysis. A 2022 study on AI-powered hair analysis highlighted the necessity for “training across diverse hair types, textures, and skin tones to avoid bias”. Without this intentional inclusion, such systems might miss critical indicators of hair health or misinterpret conditions specific to textured hair, potentially leading to inadequate or even harmful recommendations. The study also acknowledged the challenges of image quality and consistency, which can be even more pronounced for hair types that are volumetrically complex or reflect light differently.

Ethical AI development for hair demands representative datasets, intersectional design, and collaborative wisdom to honor hair’s ancestral narrative.

The shift towards more equitable AI visual systems represents an invitation to decolonize digital perception, to move beyond a limited, Eurocentric default, and instead, to create technologies that truly see and affirm the full, vibrant spectrum of human visual identity. This endeavor is not merely about technological refinement; it is a profound act of cultural validation, a conscious effort to ensure that the digital future does not erase the rich visual past of textured hair heritage. The academic pursuit here is a call for a responsible and culturally sensitive approach to AI, one that actively contributes to a world where all hair stories are seen, valued, and understood.

Reflection on the Heritage of AI Visual Bias

As we close this contemplation of AI Visual Bias, particularly through the prism of textured hair, we are reminded that technological advancements, like the strands of our own hair, are deeply rooted in their origins. The meaning of AI Visual Bias is not confined to lines of code or statistical models; it breathes with the very history of how we have seen, or failed to see, the beauty in diverse human forms. Each curl, coil, and wave carries an ancestral story, a lineage of care, resilience, and identity that has persisted across continents and generations, weathering storms of systemic bias and cultural denigration.

The journey to understand AI Visual Bias is, in essence, a journey into understanding our shared human narrative, specifically the chapters often overlooked or deliberately silenced. We have learned that the silicon gaze, left unchecked, can merely echo the historical biases of the human eye, perpetuating a visual world where textured hair, with its inherent variations and rich cultural symbolism, remains in the periphery or is misconstrued. This is a profound recognition that the algorithms we build are not neutral; they are imbued with the societal consciousness and historical imprints of their creators and the data they consume.

Yet, within this recognition lies a powerful possibility for healing and restoration. The conversation around AI Visual Bias becomes a call to action, an invitation to weave new patterns into the digital loom, ones that celebrate every strand, every texture, with the reverence it deserves. We are prompted to cultivate systems that do not just process pixels but perceive the spirit within, honoring the deep spiritual and cultural connections that hair holds for Black and mixed-race communities.

The “Soul of a Strand” ethos encourages us to remember that hair is not just a biological attribute; it is a living archive, a repository of ancestral wisdom, ritual, and communal memory. When AI systems fail to accurately render textured hair, they are not merely making a technical error; they are, in a deeper sense, missing a fragment of this living archive, a piece of a story that deserves to be seen and held with dignity. Addressing AI Visual Bias is therefore an act of preservation, a commitment to ensuring that the digital future does not inadvertently erase the heritage that has shaped us.

It is about crafting technology that reflects the authentic, boundless beauty of humanity, ensuring that every individual, regardless of their hair’s glorious texture, is truly seen, understood, and celebrated in the digital reflections of our world. This work is an ongoing conversation, a tender thread that connects scientific inquiry with cultural wisdom, seeking a future where technology serves to uplift and affirm all of humanity’s rich visual heritage.

References

- Buolamwini, J. & Gebru, T. (2018). Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification. Proceedings of the 1st Conference on Fairness, Accountability and Transparency, 77-91.

- Koval, C. Z. & Rosette, A. S. (2020). The Natural Hair Bias in Job Recruitment. Social Psychological and Personality Science, 11(8), 1085-1093.

- Byrd, A. D. & Tharps, L. L. (2014). Hair Story: Untangling the Roots of Black Hair in America. St. Martin’s Press.

- Mercer, K. (1994). Welcome to the Jungle: New Positions in Cultural Studies. Routledge.

- Rooks, N. M. (1996). Hair Raising: Beauty, Culture, and African American Women. Rutgers University Press.

- Sieber, R. & Herreman, F. (2000). Hair in African Art and Culture. Museum for African Art.

- Johnson, D. & Bankhead, T. (2014). Hair and the Black Woman: A Sociological and Historical Examination. Journal of Black Studies, 45(1), 87-104.

- Paterson, M. (2006). The Senses of Touch: Haptics, Affects and Technologies. Berg Publishers.

- Monroe, J. (2019). The Ethics of Artificial Intelligence in Marketing. Journal of Business Ethics, 159(3), 859-869.

- Yucer, A. & Böhme, R. (2022). Gone With the Bits: Revealing Racial Bias in Low-Rate Neural Compression for Facial Images. arXiv preprint arXiv:2205.02983.